Leaked: New Multimodal AI and App Building Features Coming to Google Makersuite

Big changes are coming to Google's Makersuite platform, according to leaked screenshots I've obtained. Makersuite, currently in beta, allows anyone to access powerful AI models like PaLM and build their own apps and prototypes. But new unannounced features suggest Google has even bigger plans for Makersuite as a hub for multimodal AI and easy app development.

Two major new capabilities appear to be in the works:

1. Project Gemini - A multimodal AI model that can process text, images, and audio together.

2. Stubbs - A way to instantly generate AI-powered app prototypes with just a text prompt.

These new additions would dramatically expand Makersuite's capabilities and Google's lead in generative AI. Let's break down what we know so far about each leaked feature:

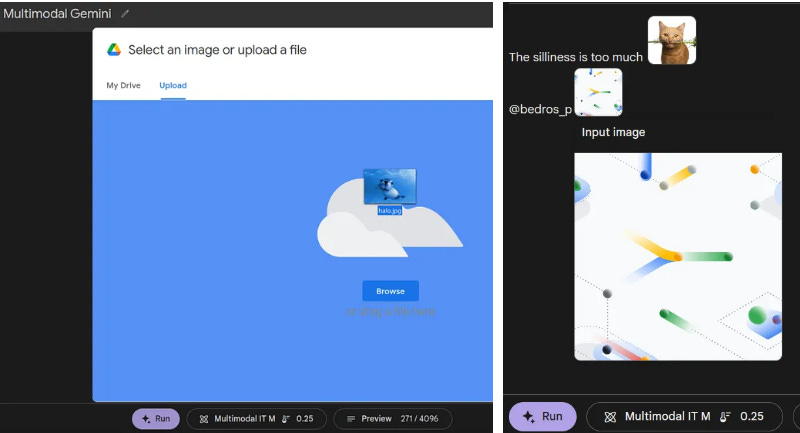

Project Gemini Brings Multimodal AI to Makersuite

The biggest reveal from the leaked screenshots is the addition of something codenamed "Project Gemini." This appears to be a new multimodal AI model created by DeepMind, Google's AI research division.

Multimodal AI can process and generate different modes of data like text, images, and audio together. This allows for more natural and capable AI systems. For example, an AI assistant with multimodal abilities could both understand a spoken question and generate a visual response.

Google's existing models like PaLM are focused on text. But Gemini would bring multimodal abilities to Makersuite users for the first time.

The screenshots show Gemini integrated directly into Makersuite's text and data prompts. Users can include images alongside text for Gemini to process together. The AI can then output HTML that includes both text and images in response.
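To make that flow a bit more concrete, here's a rough Python sketch of how an interleaved text-and-image prompt or response could be represented and turned into HTML. To be clear, nothing here comes from the leak or from any Google API: `TextPart`, `ImagePart`, and `render_html` are names I made up for illustration, and no model is called.

```python
import html
from dataclasses import dataclass
from typing import List, Union


@dataclass
class TextPart:
    """Hypothetical container for a chunk of text."""
    text: str


@dataclass
class ImagePart:
    """Hypothetical container for an image reference."""
    url: str       # where the image lives
    alt: str = ""  # short description for accessibility


# A multimodal prompt or response is modeled as an ordered mix of parts.
Part = Union[TextPart, ImagePart]


def render_html(parts: List[Part]) -> str:
    """Render an ordered list of parts as a simple HTML fragment."""
    pieces = []
    for part in parts:
        if isinstance(part, TextPart):
            # Escape text so user content cannot inject markup.
            pieces.append(f"<p>{html.escape(part.text)}</p>")
        else:
            pieces.append(
                f'<img src="{html.escape(part.url, quote=True)}" '
                f'alt="{html.escape(part.alt, quote=True)}">'
            )
    return "\n".join(pieces)


# Example: text and an image combined in one prompt.
prompt = [
    TextPart("What landmark is shown in this photo?"),
    ImagePart(url="photo.png", alt="user photo"),
]
print(render_html(prompt))
```

The point is just the shape of the data: text and images travel together as one ordered list, and the same structure can be rendered as mixed HTML on the way out.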

This is a big step up from Makersuite's current text-only capabilities. It also has advantages over Google's new Bard chatbot, which is limited to text and simple image inputs. With Gemini, Makersuite will offer a more advanced playground for multimodal AI experiments.

Some key details about Project Gemini:

- Created by DeepMind, so likely based on state-of-the-art AI research.

- Internal codename appears to be "Jetway", matching DeepMind's naming scheme.

- Supports text, images, and audio as inputs.

- Can output text, images, and HTML content together.

- Integrated directly into Makersuite prompts for easy use.

- Likely utilizing a model more powerful than PaLM or Bard.

This addition would give Makersuite users incredible new powers. Imagine creating a multimodal AI assistant that can interpret audio and images before responding in rich HTML. The possibilities are endless.

Google has not publicly announced a Gemini integration for Makersuite. This leak provides the first hints of how it might work there and what it could do. If the screenshots are accurate, it could launch within Makersuite sometime this year.

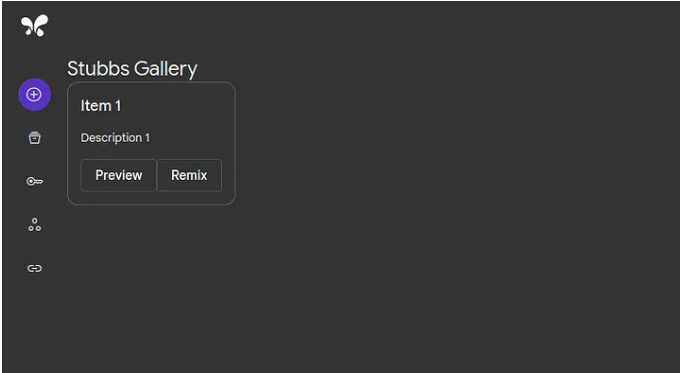

Stubbs Allows Instant App Prototyping with AI

The other major leaked feature is called Stubbs. This appears to let users instantly generate functional app prototypes without any coding.

Stubbs allows you to simply write a text prompt describing the app you want to build. Makersuite's AI will then generate the full front-end code for a working prototype.

The interface shown in the screenshots is very streamlined. You can deploy your Stubbs app with one click, then share or publish it. There's even a community gallery for finding and remixing Stubbs made by others.

This is an entirely new paradigm for basic app development. Rather than painstakingly mocking up app designs and flows, Stubbs lets you conjure a functioning prototype from text in seconds.

For simple app ideas, this could radically compress development timelines. Of course, Stubbs won't replace the need for custom backends or complex functionality. But it could become an immensely useful tool for mocking up UIs, testing ideas, and getting to a functional prototype faster.

Some key details about Stubbs:

- Generates front-end code for app prototypes from text prompts.

- Lets you instantly deploy, share, and publish Stubbs.

- Includes a community gallery for finding and remixing others' Stubbs.

- Uses a separate AI model called Text Bison rather than Gemini.

- Easy workflow for rapid prototyping without coding skills.

Again, Google has shared nothing publicly about this Stubbs capability yet. If implemented well, it could become a game-changer for quickly mocking up apps and prototypes.

Other Leaked Features and Limitations

Beyond Gemini and Stubbs, a few other enhancements appear to be in development:

- Built-in translation between languages without prompt limits.

- Autosaving for text prompts to prevent data loss.

However, some limitations were also noted:

- Stubbs will only produce front-end code, not full-stack apps.

- Gemini likely won't generate brand new images - just enhance text with existing images.

- Multimodal abilities limited to text and data prompts for now, not chat.

- GIFs not yet supported in the visual interface.

Overall, though, these leaks reveal some incredibly ambitious additions coming to Makersuite. After launching just months ago, Google already seems poised to take Makersuite to the next level.

Gemini in particular looks set to leapfrog OpenAI and other competitors in multimodal AI capabilities. And Stubbs could lower the barrier for anyone to start building basic apps instantly.

These features are clearly still in development and could change before any public launch. But if Google can deliver on this vision, Makersuite may become the premier destination for both using and building AI-powered apps.

The coming months promise to be very exciting as we (hopefully) see how these new innovations shape up! I'll be sure to keep you updated here with any official details as Google unveils Gemini, Stubbs, and more.

What do you think about these leaked additions to Makersuite? How might you use multimodal AI and instant app prototyping in your own projects? Let me know your thoughts and predictions!