OpenAI's GPT Store, Publisher Payments for AI Content, Mind Reading Tech, Robotics Advances, and More Futuristic News

This week there's big news around OpenAI and their plans to open up a GPT store where people can sell custom AI assistants built on GPT. We also learned that tech giants like OpenAI and Apple are offering millions to news publishers for access to their content to train AI models, causing backlash.

Microsoft announced plans to add a dedicated Copilot key to keyboards so its AI assistant is one button away, Google is working on a premium version of Bard dubbed “Bard Advanced,” and Alibaba open-sourced a powerful new image-to-video AI model.

There were some interesting AI mind-reading demos and big announcements around robotics research from companies like Google DeepMind. US Supreme Court Chief Justice John Roberts even published a detailed year-end report highlighting the need for the legal system to understand the benefits and risks of AI technology.

Read on for all the key details!

OpenAI to Launch GPT Store for Selling Custom AI Assistants

OpenAI CEO Sam Altman announced they will soon launch an online GPT store where anyone can build and sell custom GPT chatbots. Creators of popular GPTs could earn a share of OpenAI's subscription revenue. To prepare GPTs for the store, you'll need approval from OpenAI to ensure they meet content policies. An interesting aspect is that GPT builders may have to provide customer support for issues users have with their assistants.

While allowing people to monetize AI creations opens new opportunities, having to manage user issues yourself may limit interest. But for those who build truly unique and helpful assistants, it could become a lucrative business.

OpenAI and Apple Offering Millions for Publisher Content, Irking Some

With AI models relying on vast amounts of training data, tech companies are paying publishers for the rights to their articles. However, the amounts on offer aren't satisfying some major publishers like The New York Times. OpenAI is reportedly offering between $1 million and $5 million a year, and Apple is offering at least $50 million over multiple years.

The core issue is that if AI models can produce articles, reports, and content that compete with or replace publishers' work, publishers stand to lose far more revenue than these deals provide. At the same time, quality content still needs human creators and reporting behind it, so those creators need adequate incentives.

There's no easy solution here. Compared with simply asking an AI model, paywalls and ads increasingly frustrate consumers. Ultimately, the news industry will likely need to adapt its business models for the age of AI.

Microsoft Adding Dedicated Copilot Key to Keyboards

Microsoft announced plans to add a dedicated “Copilot” key to future keyboards, giving instant access to Copilot in Windows, its AI assistant. The key will carry the familiar Copilot icon. Pressing it will open the Copilot pane that is normally accessed by clicking the button near the Windows search bar.

While not the flashiest announcement, it shows Microsoft is all-in on integrating AI capabilities directly into its products. A single key press puts Copilot within reach of every Windows user, not just those who remember to click its taskbar button. Look for more AI helper functions to be similarly streamlined and accessible going forward across Windows and other Microsoft products.

Google Working on “Bard Advanced” Version of Chatbot

Google engineer Dylan Rousell tweeted that Google will soon offer a premium version of its conversational AI agent Bard. The new tier, dubbed “Bard Advanced,” will come as part of Google One paid plans. It will likely grant access to Google's more powerful Gemini Ultra model, which was used to wow people at its launch event with demos such as planning a friend's wedding. Google has been burned by flashy launches before, though. In Bard's original launch demo, the chatbot served up incorrect information about the James Webb Space Telescope, and Alphabet lost roughly $100 billion in market value as its stock plunged.

No further launch details were given. Still, offering advanced capabilities to paying members could be a smart move while Google's AI systems mature. Some users will certainly want early access to powerful systems, even if occasional mistakes still slip through.

Alibaba Open Sources Image-to-Video AI “I2VGen-XL”

This week, Chinese e-commerce leader Alibaba open-sourced an image-to-video generation model called “I2VGen-XL.” It can produce higher-resolution outputs and longer videos than previous tools. Unlike text-to-video models, it requires an input image, which it animates into a moving video scene. Some examples show realistic and complex video segments several seconds long.

Popular tools like Runway and Pika largely rely on paid tiers or enterprise pricing, so an open-source option for high-quality video generation is an exciting step. I haven’t tested it myself yet, but I plan to share results if I can get it running properly. It could open up creative possibilities if it proves reasonably accessible to individuals.

DeepMind Shares Latest Robotics Research

Google DeepMind laid out some of its latest robotics research, focused on making robot training more efficient. AutoRT uses large language models to help coordinate robots as they gather training data. SARA-RT makes Robotics Transformer models faster and leaner. RT-Trajectory automatically adds visual outlines of desired motions to training videos. Together, these aim to help robots better generalize knowledge to new situations.

Given the hefty computational power these models require, optimizations that cut down on expensive calculations will be important for one day fielding useful home or office robots. We also covered an impressive new general-purpose robot from researchers in California. It can be built entirely from off-the-shelf parts for about $32k, and its software is open source. I expect 2024 to bring massive strides in robot capabilities.

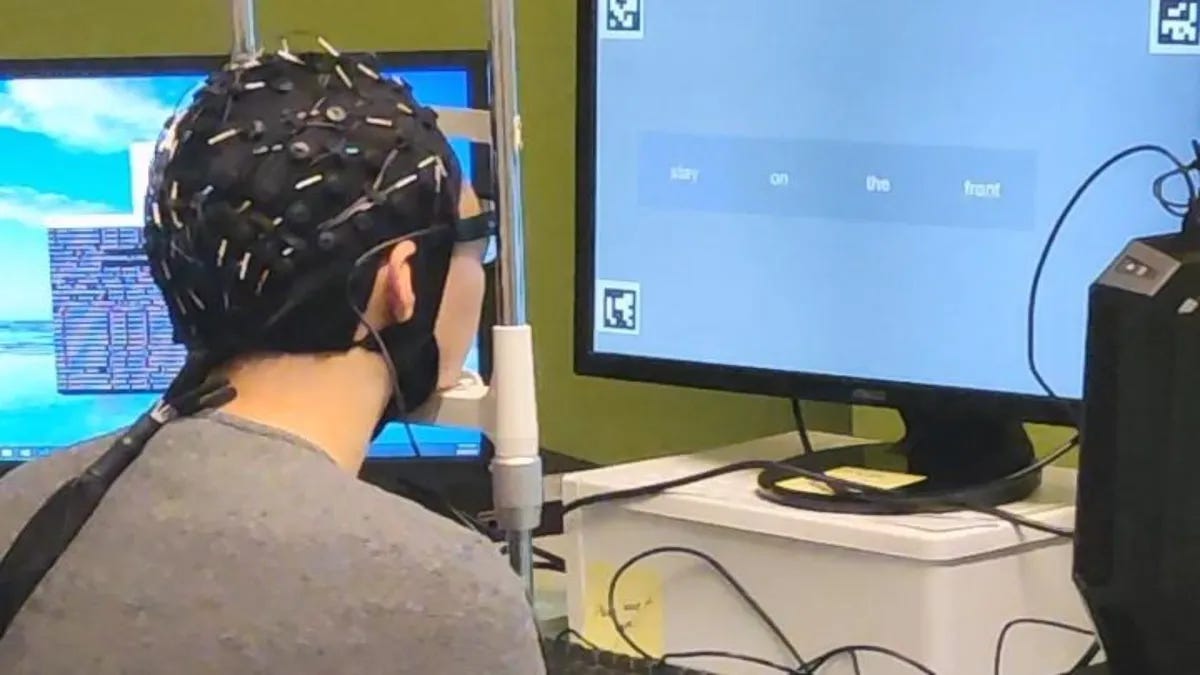

Mind Reading Demo Shows AI Thought Transcription

Montreal-based startup Whisper released a demo of mind-reading technology that lets wearers silently “speak” full sentences using thought alone. It relies on an electroencephalography (EEG) cap that scans brainwaves. An AI model translates the detected neurological signals into written words on a screen, and into speech via a voice synthesizer.

It's very early stage and less seamless than Neuralink’s anticipated brain implant interfaces. Even so, articulating thoughts this way could help people who are unable to speak to communicate. The video shows some lag between thinking a sentence and seeing it displayed. Still, getting machines to interpret imagined speech is a huge step toward truly seamless human-computer connections.

US Supreme Court Chief Justice's Year-End Report Weighs AI's Promise and Risks

US Supreme Court Chief Justice John Roberts devoted much of his extensive 2023 Year-End Report on the Federal Judiciary to AI. He examined how the federal judiciary could embrace artificial intelligence to streamline and improve its processes. He also stressed better understanding AI's limitations in areas that fundamentally require human nuance and trust, notably reaching verdicts.

The report cites problems that arise from relying too heavily on pattern-matching algorithms instead of contextual judgment: “In at least nine cases, lawyers have presented past cases that turned out not to exist at all – the arguments were hallucinated by AIs.” Human oversight of decisions that affect people's rights or lives remains essential, even as AI looks to transform areas like legal research.

It’s an astute case for not getting swept up in the hype cycle and instead applying these powerful tools judiciously. We need more measured consideration of AI’s pros and cons from regulators.