The AI Arms Race Heats Up: New Language Models and Chips Unveiled

The Race for AI Supremacy: New Language Models, Chips, and the Battle for the Future of Technology

The tech world was abuzz this week with a flurry of major AI announcements, further intensifying the race to build the most capable language models and the hardware that runs them. The epicenter of the action was Google's Cloud Next conference in Las Vegas, where the search giant unveiled a slew of new AI offerings. But Google was far from alone, as OpenAI, Meta, Intel, Mistral AI, Stability AI, and others also made significant AI moves.

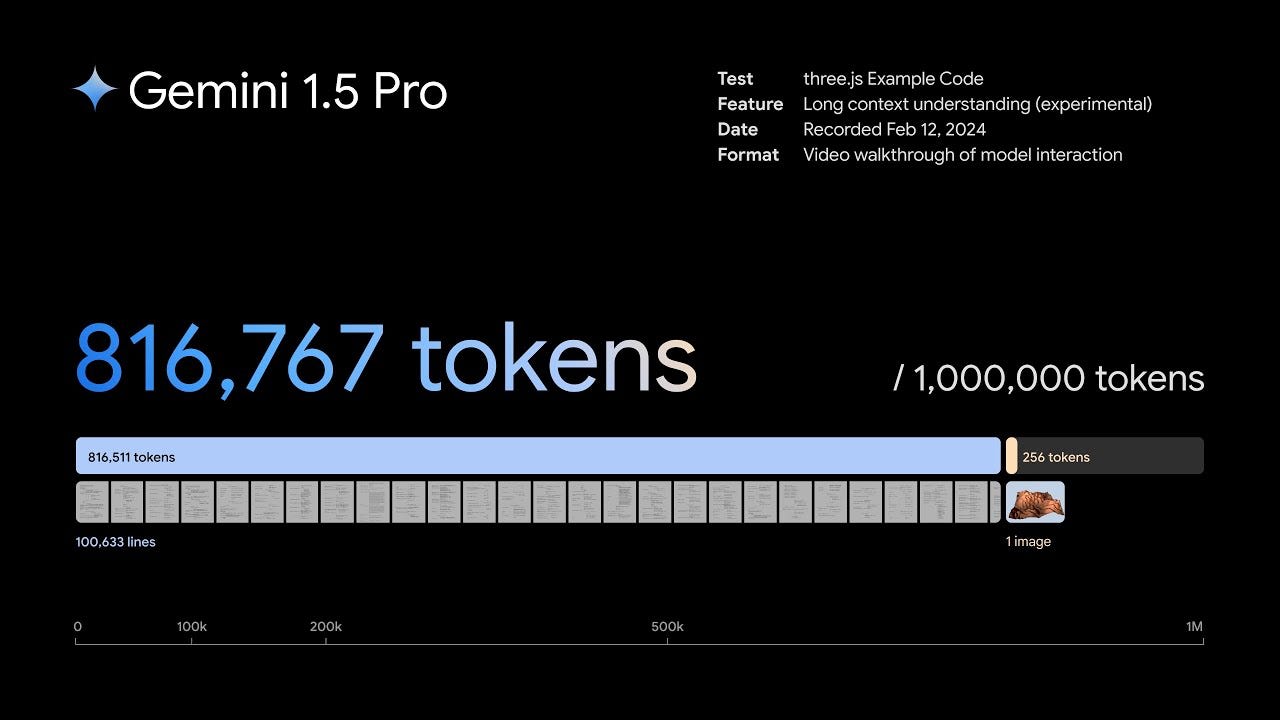

Gemini 1.5 Reaches Broader Availability

The marquee announcement from Google was the broader rollout of Gemini 1.5 Pro. The model was previously available only to a limited set of developers. It is now accessible in public preview through the Gemini API in more than 180 countries, and it can now process audio input directly. Its industry-leading context window of 1 million tokens means it can take in roughly 700,000 words of text in a single prompt and draw on all of it to inform its outputs.

One early demonstration of Gemini 1.5's new audio capabilities involved feeding the model nothing but a raw audio file and having it generate a summary of the video, key takeaways, and attention-grabbing YouTube title and thumbnail ideas. The model's ability to accurately timestamp and summarize long audio content is particularly noteworthy, as this has been a persistent challenge for other language models like GPT-4.

OpenAI Unleashes an Upgraded GPT-4

OpenAI, for its part, released an updated version of its flagship GPT-4 model. While details remain scarce, the company says this latest GPT-4 Turbo iteration brings significant improvements in areas like coding and mathematics. According to the LMSYS Chatbot Arena leaderboard, the new GPT-4 Turbo has overtaken Anthropic's Claude 3 Opus as the top-rated language model currently available.

This GPT-4 update comes on the heels of a New York Times report that OpenAI transcribed more than 1 million hours of YouTube videos to help train GPT-4, a practice that YouTube's CEO has said would violate the platform's terms of service. It remains to be seen what consequences, if any, this data sourcing will have for OpenAI.

A Tidal Wave of Open-Source Models

The open-source AI landscape also saw major activity, with several new large language models hitting the scene. Stability AI released Stable LM 2 12B, a 12-billion-parameter model that reportedly outperforms the larger Mixtral 8x7B. However, Stability's decision to require a paid membership for commercial use has raised questions about how "open" the model really is.

Perhaps the most intriguing open-source development came from Paris-based Mistral AI, which quietly released Mixtral 8x22B, a sparse mixture-of-experts model with roughly 141 billion total parameters, of which about 39 billion are active for any given token. Distributed via a public torrent link with minimal fanfare, the model supports a context window of roughly 65,000 tokens. While details are scarce, early indications suggest Mixtral 8x22B could be a formidable challenger to the top closed-source models.

Google also expanded its open Gemma lineup with two specialized variants: CodeGemma, tuned for coding tasks, and RecurrentGemma, a research-oriented model built on a recurrent architecture for more memory-efficient inference. These models aim to provide more efficient, task-specific alternatives to the general-purpose Gemma base models.

Finally, Meta has confirmed that it will soon release Llama 3, which is projected to rival the capabilities of GPT-4 while remaining openly available for anyone to use and build upon. This could provide a major counterweight to the dominance of OpenAI and other closed-model giants.

The Chip War Rages On

Alongside the language model developments, a parallel arms race is unfolding in AI hardware. Tech giants are racing to build their own chips for training and running these models, reducing their reliance on NVIDIA's industry-standard GPUs.

At Google Cloud Next, the company made its TPU v5p AI accelerator generally available and unveiled Axion, its first custom Arm-based data center CPU, which Google claims delivers up to 50% better performance than comparable current-generation x86-based instances. Intel also joined the fray, introducing its Gaudi 3 AI accelerator, which it claims offers 40% better inference power efficiency than NVIDIA's H100 GPU.

Meta announced the second generation of its in-house Meta Training and Inference Accelerator (MTIA), which it says delivers 3x the performance of the first generation. These chip efforts underscore the tech giants' determination to loosen NVIDIA's grip on the AI hardware market.

However, NVIDIA remains firmly entrenched, having recently announced its next-generation Blackwell chips, which the company says deliver up to 4 times the training performance of the current H100. The industry's relentless pursuit of faster, more efficient AI hardware shows how pivotal these chips are to progress in the field.

Advances in AI-Generated Media

The Google Cloud Next conference also showcased some intriguing advancements in AI-generated media. One highlight was a set of upgrades to Imagen 2, Google's answer to OpenAI's DALL-E and Adobe's Firefly. Like those tools, Imagen 2 generates static images from text prompts, but it can now also produce short animated clips, which Google calls "live images," a feature that could prove useful for creating GIFs and other dynamic visual content.

Another noteworthy announcement was Google Vids, a new AI-powered video creation app for Google Workspace that can turn a text prompt or script into a presentation-style video, much like an animated slide deck. Though the tool is not yet publicly available, it points to AI's growing ability to automate the creation of polished multimedia content.

Separately, researchers introduced "MagicTime," an open-source AI model that specializes in generating high-quality time-lapse videos from text prompts. This specialized approach demonstrates the potential for AI to streamline the production of certain types of video content.

These advances highlight the rapidly evolving capabilities of AI-generated media, which are likely to have profound implications for content creation, marketing, and visual storytelling in the years to come.

The Debate over AI Training Data Continues

Amid the flurry of new model and hardware announcements, the debate over AI training data has also intensified. Last week, YouTube CEO Neal Mohan warned that if OpenAI had used YouTube videos to train its Sora video model, it would be a "clear violation" of the platform's terms of service.

Now, a new congressional bill, the Generative AI Copyright Disclosure Act introduced by Representative Adam Schiff, aims to force AI companies to disclose the copyrighted works used to train their models. The bill could have significant implications for industry leaders like OpenAI, Google, Microsoft, and Meta. While its ultimate fate remains uncertain, it underscores the growing scrutiny of, and calls for transparency around, AI training data.

Adobe, by contrast, has taken a more proactive approach, reportedly offering to pay creators between $3 and $7 per minute of video footage to help train its AI video-generation model. This "data-for-dollars" model could become a template for how AI companies acquire training data in the future, particularly if legislation forces greater disclosure.

Meta, meanwhile, has announced that it will begin applying "Made with AI" labels to AI-generated images, video, and audio on its platforms when it detects industry-standard indicators of AI generation or when users disclose that they are posting AI-generated content. The move is an effort to get ahead of the growing challenge of distinguishing AI-generated content from human-created media.

The ongoing tensions and evolving strategies surrounding AI training data highlight the complex and often contentious landscape that technology companies must navigate as they race to develop ever-more capable models.

The AGI Debate Rages On

Beyond the concrete product announcements, the broader existential questions surrounding artificial general intelligence (AGI) continue to be a topic of intense debate and speculation.

Tesla and SpaceX CEO Elon Musk made waves this week by predicting that AI will be smarter than the smartest human within the next year or two. The claim was promptly challenged by Meta's chief AI scientist Yann LeCun, who is skeptical that current large language models will ever achieve true AGI.

LeCun believes that Meta's ongoing work on V-JEPA, a self-supervised learning approach based on his Joint Embedding Predictive Architecture, holds more promise for eventually reaching human-level intelligence. This divergence of views shows how much fundamental uncertainty and disagreement still pervade the AGI discourse.

The long-awaited Humane AI Pin, a screenless wearable pitched as an always-available AI assistant, also reached reviewers this week, to largely negative reactions. Reviewers panned the $699 device, which also requires a $24 monthly subscription, as clunky, impractical, and lacking in compelling use cases.

These contrasting developments - the bold AGI predictions, the skepticism of leading experts, and the lukewarm reception of a supposedly transformative AI product - illustrate the complex and multifaceted nature of the quest for artificial general intelligence. The path ahead remains fraught with both excitement and uncertainty.

The AI Art Boom Continues

On a final note, the AI art generation craze shows no signs of slowing down. One particularly striking example emerged this week, in which a card game developer reportedly paid an AI-assisted artist a staggering $90,000 to generate the artwork for their game.

While the term "AI artist" may be a misnomer, since the human artist still played a crucial role in refining and polishing the AI-generated imagery, this case underscores the growing commercial viability of AI-powered visual content creation. As more creators and businesses adopt these tools, the ripple effects on the creative economy are likely to keep spreading.

Conclusion

The past week has been a whirlwind in the world of artificial intelligence, with major announcements, heated debates, and glimpses of the transformative potential - as well as the current limitations - of AI technology. From the rollout of advanced language models to the intensifying chip wars and the ongoing discussions around AGI and AI-generated media, the pace of innovation and the complexity of the challenges facing the industry are both clearly on the rise.

As these technological developments unfold, the need for clear-eyed analysis, ethical considerations, and a balanced understanding of both the promises and perils of AI only becomes more pressing. The Next Wave podcast, launched in partnership with HubSpot, aims to provide a forum for such in-depth discussions, delving into the implications and long-term trajectories of these transformative technologies.

Ultimately, the AI landscape is evolving at a dizzying pace, and the next chapter of this story is sure to be just as captivating as the one we've witnessed this week. Stay tuned for more as the great AI arms race marches on.