The AI Newsletter: Exciting Developments in Artificial Intelligence

The Latest in AI - Stable Diffusion 3, Grok 1.5, and Exciting Announcements from Google, Microsoft, and More

Welcome to the latest edition of our AI newsletter, where we cover the most exciting developments in artificial intelligence. The past couple of weeks have seen some major announcements that will shape the future of AI, so let's dive right in!

Stable Diffusion 3 - The Next Generation of AI Art

One of the biggest stories was the announcement of Stable Diffusion 3 by Stability AI. This new version promises significant improvements in image quality, adherence to prompts, and spelling abilities.

Some example images show impressively coherent artworks with detailed prompts like "an astronaut on the moon wearing a tutu with a pink umbrella riding a pig with a bird wearing a top hat." The words "Stable Diffusion" even appear cleanly generated in the image itself.

With the open nature of Stable Diffusion, users have been free to generate any image imaginable. However, Stability AI says version 3 will introduce "safeguards" to encourage responsible use. It remains to be seen if these affect the creative freedom users have come to expect.

The potential is certainly exciting. Stability AI's CEO revealed the architecture can support video generation given sufficient compute power. This could eventually produce Sora-like results at a fraction of the cost. For now, version 3 shows AI art continues advancing rapidly.

Google's Gemini Stumbles on Historical Images

While Stable Diffusion pushes boundaries in AI art, Google's new Gemini model faced some public stumbles. When prompted to generate historical images, it added modern diversity rather than accurately depicting the past.

For example, asking for "Australian woman" produced results with various ethnicities rather than just Caucasian given Australia's historical demographics. Other prompts for medieval knights, Vikings, and founding fathers also ignored historical accuracy.

This highlighted the challenges of training AI without relevant context and nuance around ethnicity, culture and time periods. Google quickly paused Gemini's ability to generate images of people until improvements are made.

It exemplified the importance of thoughtfully curating training data and tuning so AI models don't inadvertently perpetuate harmful stereotypes or distort the historical record. There is clearly more work to do, but responsible development is critical as these models become more prevalent.

Grok 1.5 Coming Soon from xAI

While Gemini sorts out its historical miscues, xAI's Grok model continues progressing. Grok 1.5 is expected to release in about two weeks with some intriguing new capabilities.

One is "Grok Analyze" which can summarize entire Twitter threads to deduce the core truth. Grok will also help users craft posts by providing AI assistance through a new interface button.

Collaboration between Grok and Midjourney also appears likely. xAI founder Elon Musk confirmed discussions are happening to potentially enable Midjourney's image generation in Grok.

This partnership would combine Grok's reasoning with Midjourney's artistic capabilities. While details are still scarce, Grok 1.5 should reveal more when it launches soon.

Groq - An AI Chip for Lightning Fast Processing

In addition to xAI's large language model Grok, another company named Groq (with a q) made waves by unveiling their AI processor chip.

This "LPU" (Language Processing Unit) specializes in inference rather than training. By optimizing chips specifically for executing trained models, Groq enables phenomenally fast response times.

Their demo showcasing real-time conversation with an AI assistant impressed many. The AI generated coherent sentences in under 1 second, highlighting the power of purpose-built AI hardware.

As conversational AI becomes more ubiquitous, reducing lag will make interactions feel much more natural. High inference speeds that don't compromise output quality unlock many use cases. Expect Groq's technology to enable next-generation AI applications.

OpenAI Rolls Out ChatGPT Feedback Feature

In official ChatGPT news, OpenAI launched a new feedback feature to rate community-created bots. Browsing the GPT Store now shows star ratings and review counts for custom GPTs such as Wolfram.

Providing user feedback helps identify the most useful bots. It also incentivizes creators to build bots solving problems and not just chasing clicks.

This small addition brings helpful transparency so users can better evaluate bots. Allowing ratings directly within the official ChatGPT interface was an astute move by OpenAI to improve the ecosystem.

Reddit Partnership Enables AI Training Breakthroughs

OpenAI makes headlines training models on massive datasets, but accessing quality data remains a major bottleneck. That's why Reddit potentially allowing companies to train AI on its content provoked much intrigue.

Last week's rumor became reality when Google confirmed an expanded partnership with Reddit. Google Cloud now has access to Reddit data to understand content patterns and user interactions.

With over 100,000 active communities covering every imaginable topic, Reddit offers invaluable training data diversity. Models trained on Reddit's eclectic mix of news, memes, and discussions could unlock new breakthroughs.

But ethical concerns around personal data use will require thoughtful implementation. Regardless, it exemplifies the hungry demand for high-quality data as companies race to train ever-smarter AI.

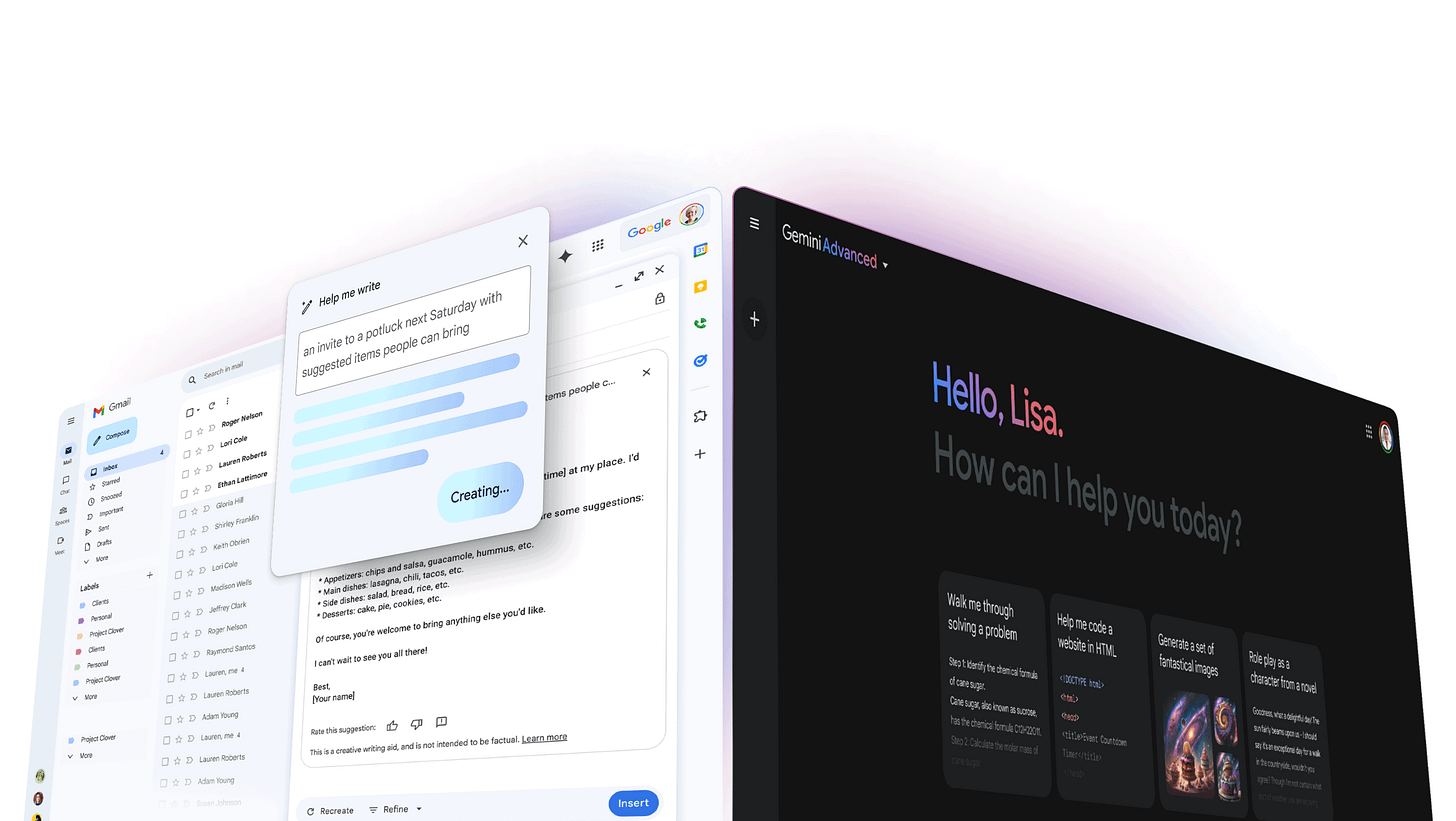

Google Brings Gemini to Gmail, Docs and Chrome

Speaking of Google, they incorporated Gemini directly into Gmail, Google Docs and Chrome - demonstrating their aggressive AI integration vision.

Gmail now offers a "Help me write" button enabling Gemini-powered autocomplete suggestions. Google Docs also utilizes Gemini to spice up your writing.

Chrome recently launched an AI writing assistant for compatible websites. By right clicking in text fields, you can access Gemini to help formulate content.

However, some users experienced bugs with Chrome's rollout. When functioning properly, the seamless integration provides another touchpoint for Gemini's capabilities.

Between acquiring shares in Anthropic, partnering with Reddit, and expanding Gemini access, Google is clearly all-in on AI. They aim to thoroughly permeate their products with the technology.

Google Open-Sources New 7B Parameter AI Model

In one of their most unexpected moves yet, Google released a family of open-weight AI models called Gemma, available in 2 billion and 7 billion parameter sizes. The models are small next to frontier systems, but Google says they are built from the same research and technology as Gemini.

Google claims Gemma shows stronger reasoning ability and mathematical logic than existing open models of similar size like Meta's Llama 2. However, early testing reveals sub-par performance, especially for more complex prompts.

Nonetheless, providing free access to capable models from a major lab accelerates research. Instead of relying solely on limited closed models, scientists can probe AI fundamentals more thoroughly.

Gemma's deficiencies also exemplify the need for rigorous testing of claimed capabilities, rather than simply parameter counts. There is no substitute for empirical evidence.

Adobe Forms New AI Video and Animation Team

Shifting focus to a different creative field, Adobe formed a new research team called CAVA (Co-Creation for Audio, Video, & Animation), focused on generative video and animation.

Their charter spans exploring tools to reinvent video editing and animation authoring with the help of AI. This likely responds to groundbreaking models like OpenAI's Sora demonstrating AI's untapped potential beyond images.

With Adobe's expertise and resources in creative software, they may emerge as a leader in user-friendly AI creation. Greater computational power makes it practical to build tools that assist professionals rather than replace them.

Integrating AI seamlessly into existing workflows could win over creators hesitant to adopt entirely new paradigms. If Adobe executes well, they are poised to dominate AI-enhanced creative media.

Sora Coming to Microsoft Copilot, But Timeline Uncertain

Speaking of Sora, Microsoft confirmed the intriguing video generator will integrate into its Copilot assistant eventually. But they caution it will take substantial time to productize.

Copilot already handles text and image generation. Adding Sora's ability to generate video from text could create an extremely potent creative assistant.

However, huge obstacles remain around compute requirements, cost and practical utility. Copilot servers would need massive GPU capacity to run Sora models, which likely rules out near-term availability.

Nonetheless, the vision illustrates AI's potential to not just assist, but collaborate in creative work. The future of human-AI partnerships remains thrilling to ponder.

Viral AI-Generated Videos of Will Smith

On a lighter note, Will Smith went viral once again due to AI - but this time deliberately. He released a series of absurd videos showing him exaggeratedly eating spaghetti.

The over-the-top silly green screen videos parody AI-generated footage of Smith that circulated last year. Many speculated the new videos themselves were created using advanced models like Sora.

However, they originated from Smith directly poking fun at the technology. It exemplified AI's ongoing influence in entertainment and pop culture.

As video generation improves, distinguishing real from artificial will become increasingly challenging. Until then, humor remains one of the most potent ways to make sense of AI's disorienting capabilities.

ElevenLabs Audio Effects Add Immersion to AI Videos

Speaking of synthesized video, AI startup ElevenLabs showcased a new audio effects feature to augment generated footage. Users can input text like "ocean waves" and the model will produce corresponding sound effects.

Adding believable audio greatly increases video immersion. While entirely simulated, the enhanced realism provided by complementary sights and sounds is impressive.

ElevenLabs also revealed they were selected for Disney's accelerator program, signalling interest in using AI voices and other synthetic media. Generative audio could enable studios to cheaply scale content localization and customization.

Overall, ElevenLabs continues pushing boundaries on creative AI applications. Audio effects on generated video hint at a future where immersive media is produced almost entirely artificially.

Opusclip 3.0 - Faster AI Video Summary Tool

Another generative video tool called Opusclip launched version 3.0 of their AI video summarization app. It excels at digesting long videos into engaging short social media clips.

The upgrade claims to better identify viral moments using AI. It also introduces AI-generated b-roll to make clips more dynamic. Additional improvements include faster performance and caption flexibility.

Opusclip simplifies repurposing existing video content for different formats. Automatic clipping assisted by AI could remake media workflows. But appropriately handling copyrighted material remains tricky.

Rapid summarization also risks losing important nuance, although responsibly applied it provides another avenue to wider distribution. As online video skyrockets, AI-assisted editing tools like Opusclip will become essential.

Suno 3.0 Generates AI Music 2x Faster

Shifting to audio generation, Suno released version 3 of their AI music creation app. It offers improved audio quality, greater expressiveness, and support for 2-minute songs.

Tested samples sound crisp at the 2 minute duration, with clear lyrics and transitions. Instrumentals now separate vocals cleanly. The tool also remembers progress, enabling iterative refinement.

Musicians initially feared AI would make them obsolete. But tools like Suno actually offer new creative canvases. AI can help unlock writer's block and provide musical inspiration on demand.

Democratizing music creation to anyone with an idea could yield wonderful diversity. As with other generative art, human curation and judgment remain critical for quality. But AI promises to augment human creativity rather than replace it.

US Government Hires Chief AI Officer

With AI's capabilities growing daily, governance and ethics are crucial. That's why the US Department of Justice appointing Princeton professor Dr. Jonathan Mayer as its first Chief AI Officer made waves.

Dr. Mayer will coordinate use of AI technologies throughout the department while advising on responsible development. With AI increasingly used in areas like predictive policing, ensuring fairness and transparency is vital.

Widespread deployment of still-unproven systems risks amplifying biases and over-automation. Thoughtful oversight mechanisms are essential to steer AI's impact toward justice rather than injustice.

Hopefully this role signifies greater appreciation of AI's risks if mismanaged. All sectors of society require collective wisdom so these powerful technologies promote progress for all.