The AI Revolution: From Adobe's Creative Breakthroughs to Emotion-Tracking Smart Glasses

Exploring the Cutting Edge: How AI is Reshaping Creativity, Productivity, and Human Interaction

In the ever-evolving landscape of artificial intelligence, recent developments have set the stage for groundbreaking changes across multiple industries. From Adobe's innovative AI-powered tools to emotion-tracking smart glasses, the future of technology is being shaped by intelligent systems that promise to enhance creativity, productivity, and even our understanding of human emotions. In this comprehensive newsletter, we'll explore the latest advancements in AI technology and their potential impact on various sectors.

Adobe's AI Revolution: Firefly Video and Creative Suite Updates

Adobe, a leader in creative software, has unveiled a series of AI-powered features that are set to transform the way we approach video and image editing. At the forefront of these innovations is Adobe Firefly Video, a generative AI model that allows users to create videos from text prompts.

Adobe Firefly Video: Ethical AI-Generated Content

Adobe Firefly Video represents a significant leap forward in video generation technology. Users can simply type a text prompt, and the AI generates a corresponding video. While the results shown at Adobe Max were likely cherry-picked to showcase the best outputs, the quality of the generated videos appears impressive.

One of the most notable aspects of Adobe Firefly Video is the company's claim to ethical AI development. Adobe states that the model was trained exclusively on licensed content from Adobe Stock and public domain material. This approach aims to address concerns about the use of copyrighted content in AI training datasets. However, it's worth noting that Adobe Stock itself includes some AI-generated images, which adds a layer of complexity to the ethical considerations.

Although Adobe Firefly Video is not yet broadly available, Adobe has introduced a Firefly-powered feature called Generative Extend in Adobe Premiere Pro. It lets editors lengthen an existing clip by generating extra frames at its beginning or end. This should be especially useful for editors who need to fill a gap in the timeline, adjust clip lengths, or smooth out a transition.

Adobe Photoshop AI Upgrades

Adobe Photoshop, the industry-standard image editing software, has received significant AI enhancements. New features include:

1. Distraction Removal: An AI-powered tool that can identify and remove unwanted elements like wires, cables, and people from images with a single click.

2. Generative Workspace: A new interface for AI-assisted image creation and editing.

3. Generative Fill, Generative Expand, Generate Similar, and Generate Background: These tools, powered by Adobe's Firefly Image 3 Model, allow for more advanced image manipulation and generation.

The generative workspace in Photoshop enables users to create complex scenes and product photography setups using text prompts. This feature could revolutionize the way product photographers and graphic designers approach their work.

Adobe Lightroom AI Upgrades

Adobe Lightroom, the popular photo editing and organization software, has also received AI enhancements:

1. Quick Actions: AI-powered one-click adjustments for sky enhancement, skin retouching, and color improvements.

2. Generative Remove: A feature that allows users to remove unwanted elements from photos directly within Lightroom, without needing to switch to Photoshop.

These updates to Adobe's Creative Cloud apps demonstrate the company's commitment to integrating AI throughout its product lineup, potentially changing the workflows of creative professionals across various industries.

Adobe's Experimental AI Projects

During Adobe Max, the company also showcased several experimental AI projects that hint at the future of creative software. These "sneak peeks" included some truly impressive technologies:

Project Perfect Blend

This project demonstrates advanced AI-powered image compositing. Users can place subjects from different photos into a single scene, and the AI will automatically adjust lighting, shadows, and color to create a seamless blend. The technology even adapts to changing backgrounds, relighting the entire scene accordingly.

Project Clean Machine

Aimed at improving astrophotography, Project Clean Machine can remove unwanted flashes and artifacts from star time-lapse videos, resulting in cleaner and more professional-looking footage.

Project HiFi

Similar to Nvidia Canvas, Project HiFi allows users to create detailed room designs by drawing simple shapes. The AI interprets these shapes and generates realistic 3D objects that can be easily manipulated within the scene.

Project Scenic

This tool combines text prompts with 3D object manipulation to create complex scenes. Users can generate a basic 3D layout, adjust object positions and camera angles, and then have the AI render a photorealistic image based on the composition.

Project RemixALot

Designed for Adobe Illustrator, this project enables users to create mockups of designs like flyers and then generate variations in different sizes and styles. It can even adapt designs to match the style of reference images, potentially saving graphic designers significant time in creating multi-format campaigns.

Project SuperSonic

SuperSonic is an AI-powered audio generation tool that can create sound effects and music to match video footage. By analyzing the visual content and following text prompts, it generates audio designed to stay in sync with the on-screen action.

Project Turntable

Perhaps the most mind-blowing demonstration, Project Turntable allows users to rotate 2D vector shapes as if they were 3D objects. This technology could revolutionize character animation and illustration workflows by eliminating the need to redraw characters from different angles.

While these projects are still in the experimental phase, they offer a glimpse into the potential future of creative software, where AI acts as a powerful assistant to human creativity.

Kaiber Super Studio: Unifying AI Creative Tools

Kaiber has launched its new Super Studio, a platform that integrates multiple AI models for image and video generation. The tool incorporates Luma Labs' Dream Machine, Black Forest Labs' Flux, and Kaiber's own image and video models into a single interface.

Super Studio features a canvas-based workflow that allows users to connect different AI modules visually, similar to node-based compositing software but with a more user-friendly approach. This system enables creators to generate, modify, and combine AI-generated content in a flexible and intuitive manner.
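Super Studio's internals are not public, so what follows is only a hypothetical sketch of the general node-based idea: each module is a node that consumes the outputs of its upstream nodes, and the canvas runs in dependency (topological) order. The `Canvas` class and the node names are invented for illustration, and plain strings stand in for real model calls.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Sequence


class Canvas:
    """Toy node graph: each node has a function and the names of its input nodes."""

    def __init__(self) -> None:
        self.funcs: Dict[str, Callable[..., str]] = {}
        self.inputs: Dict[str, List[str]] = {}

    def add(self, name: str, func: Callable[..., str], inputs: Sequence[str] = ()) -> None:
        self.funcs[name] = func
        self.inputs[name] = list(inputs)

    def run(self) -> Dict[str, str]:
        # TopologicalSorter takes {node: predecessors} and yields each node
        # only after all of its predecessors, so every input is ready in time.
        order = TopologicalSorter(self.inputs).static_order()
        results: Dict[str, str] = {}
        for name in order:
            args = [results[i] for i in self.inputs[name]]
            results[name] = self.funcs[name](*args)
        return results


# Hypothetical modules; strings stand in for generated images and videos.
canvas = Canvas()
canvas.add("prompt", lambda: "a fox in snow")
canvas.add("image", lambda p: f"image({p})", ["prompt"])
canvas.add("upscale", lambda img: f"upscaled({img})", ["image"])
canvas.add("video", lambda img: f"video({img})", ["upscale"])
print(canvas.run()["video"])  # video(upscaled(image(a fox in snow)))
```

Because evaluation follows the connections rather than the order in which nodes were added, rewiring the canvas only means changing a node's input list, and `graphlib` raises `CycleError` if a connection would create a loop.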

The platform supports various creative tasks, including:

- Image generation and manipulation

- Video creation with keyframe controls

- Style transfer between images

- Video restyling

- Image upscaling

- Profile picture and logo customization

By bringing together multiple AI tools in one place, Kaiber Super Studio aims to streamline the creative process for digital artists, marketers, and content creators.

Krea AI: One-Stop Shop for AI Video Generation

Krea AI has taken integration a step further by incorporating multiple video generation models into a single platform. Users with a Krea account can now create videos using models from Minimax, Luma Labs, Runway, Pika, and Kling.

This consolidation of video generation tools allows creators to experiment with different AI models without switching between multiple platforms. Krea provides estimated generation times for each model, helping users choose the best option for their specific needs and time constraints.

Suno Scenes: AI Music Generation from Images

Suno, known for its AI music generation capabilities, has introduced a new feature called Scenes. This mobile app-based tool creates unique songs inspired by photos or videos. Users can simply take a picture or upload an existing image, and Suno will generate a song that reflects the content and mood of the visual input.

While users can provide additional context or specify a musical style, the AI is capable of interpreting the image on its own and creating fitting music. This technology opens up new possibilities for content creators, allowing them to quickly generate custom soundtracks for their visual content.

HeyGen Interactive Avatars: AI-Powered Meeting Attendance

HeyGen has unveiled a potentially game-changing technology called Interactive Avatars. This system aims to create AI-powered digital representations of individuals that can attend meetings and classes on their behalf.

The technology works by training an AI on an individual's face, voice, knowledge, and communication style. The resulting avatar can then participate in video calls, responding to questions and engaging in discussions as if it were the person it represents.

While the full capabilities and limitations of this technology are still being explored, it raises interesting questions about the future of remote work and education. The potential for individuals to "attend" multiple engagements simultaneously could revolutionize how we approach scheduling and time management.

Meta's CoTracker3: Advanced Video Element Tracking

Meta has released CoTracker3, the latest version of its research model for tracking points in video. It can follow many points on moving objects or people across frames at the same time, including points that are briefly hidden behind other objects.

CoTracker3 has potential applications in various fields, including:

- Video editing and visual effects

- Motion capture for animation and gaming

- Sports analysis and performance tracking

- Augmented reality experiences

The improved tracking capabilities could lead to more realistic visual effects, smoother augmented reality overlays, and more accurate motion analysis in various industries.
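CoTracker3 is a learned model that tracks many points jointly. To illustrate the basic task it solves, the sketch below swaps in a much older, simpler technique, block matching: to follow a point from one frame to the next, compare the small patch around it with candidate patches nearby and keep the position with the smallest sum of squared differences. The tiny synthetic frames, patch radius, and search window are assumptions. Unlike CoTracker3, this approach tracks one point at a time and cannot recover from occlusion.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grayscale image as rows of pixel intensities


def patch_ssd(a: Frame, ay: int, ax: int, b: Frame, by: int, bx: int, r: int) -> float:
    """Sum of squared differences between the (2r+1)x(2r+1) patch centered at
    (ay, ax) in frame a and the patch centered at (by, bx) in frame b."""
    total = 0.0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            d = a[ay + dy][ax + dx] - b[by + dy][bx + dx]
            total += d * d
    return total


def track_point(frames: List[Frame], start: Tuple[int, int],
                r: int = 1, search: int = 2) -> List[Tuple[int, int]]:
    """Follow one point through the frames by block matching.
    `start` must be at least r pixels away from every border."""
    y, x = start
    track = [(y, x)]
    for prev, cur in zip(frames, frames[1:]):
        h, w = len(cur), len(cur[0])
        best = None
        # Try every position in a (2*search+1)^2 window around the last position.
        for ny in range(y - search, y + search + 1):
            for nx in range(x - search, x + search + 1):
                if not (r <= ny < h - r and r <= nx < w - r):
                    continue  # candidate patch would leave the frame
                cost = patch_ssd(prev, y, x, cur, ny, nx, r)
                if best is None or cost < best[0]:
                    best = (cost, ny, nx)
        _, y, x = best
        track.append((y, x))
    return track


def make_frame(cy: int, cx: int, size: int = 10) -> Frame:
    """Synthetic frame: black except for one bright pixel at (cy, cx)."""
    return [[1.0 if (yy, xx) == (cy, cx) else 0.0 for xx in range(size)]
            for yy in range(size)]


# A bright dot moving one pixel to the right per frame.
frames = [make_frame(5, 2 + t) for t in range(4)]
print(track_point(frames, (5, 2)))  # [(5, 2), (5, 3), (5, 4), (5, 5)]
```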

Meta and Blumhouse: AI in Filmmaking

Meta has partnered with Blumhouse Productions to test AI in short film production. This collaboration involves using Meta's Movie Gen AI tool with filmmakers such as Casey Affleck, Aneesh Chaganty, and the Spurlock Sisters.

While details about the specific films are limited, this partnership represents a significant step in the integration of AI into traditional filmmaking processes. It suggests that major players in the entertainment industry are beginning to embrace AI as a creative tool rather than viewing it as a threat.

Nvidia's Nemotron 70B: A Quiet Giant in Language Models

Nvidia has quietly released a new openly available language model called Llama 3.1 Nemotron 70B Instruct, a fine-tuned version of Meta's Llama 3.1 70B. Despite the lack of fanfare, Nvidia reports that it outperforms well-known models such as GPT-4o and Claude 3.5 Sonnet on alignment benchmarks including Arena Hard, AlpacaEval 2 LC, and MT-Bench.

The strong performance of this open-source model suggests that the gap between proprietary and open-source AI is narrowing. This development could have significant implications for AI accessibility and democratization.

Mistral's Mini Models: AI for Mobile and Laptop Devices

Mistral AI has introduced new, smaller language models optimized for laptops and phones. These models, named Ministral 3B and Ministral 8B, feature context windows of 128,000 tokens, large enough to take in a long report or a substantial portion of a book in a single prompt.

The smaller size of these models makes them suitable for on-device inference, potentially enabling AI applications to run directly on mobile phones or laptops without needing to send data to cloud servers. This approach could lead to faster response times and improved privacy in AI applications.
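The "smaller size" point can be made concrete with back-of-the-envelope arithmetic: the memory needed just to hold a model's weights is roughly the parameter count times the bits per weight, divided by eight. The sketch below assumes the models have exactly 3 and 8 billion parameters (nominal sizes taken from their names) and stores weights at 16, 8, or 4 bits; real deployments also need memory for the KV cache, which grows with context length, plus runtime overhead.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory, in decimal gigabytes, needed only to store the weights.
    Ignores the KV cache, activations, and runtime overhead."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for name, params in [("Ministral 3B", 3), ("Ministral 8B", 8)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

At 4-bit precision the estimate is 1.5 GB for the 3B model and 4 GB for the 8B model, plausibly within reach of recent laptops and high-end phones, whereas a 70B model at the same precision would need about 35 GB for its weights alone.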

ChatGPT for Windows: Desktop AI Access

OpenAI has expanded the availability of its ChatGPT desktop app to Windows users. Previously only available on Mac, this move brings easier access to ChatGPT for a broader user base. The desktop app offers a familiar interface for ChatGPT users, now conveniently accessible outside of web browsers.

Perplexity Spaces: Enhanced AI Research Tools

Perplexity AI has introduced a new feature called Spaces, which combines the power of large language models with the ability to incorporate custom knowledge bases. Users can create dedicated spaces, choose their preferred AI model, and add custom instructions and context through PDFs and text files.

This feature enhances Perplexity's existing web search and multi-search agent capabilities, allowing for more personalized and context-aware AI interactions. Spaces could be particularly useful for researchers, students, and professionals who need to work with specific datasets or domain knowledge.
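Perplexity has not published how Spaces uses uploaded files. A common general pattern for this kind of feature is retrieval-augmented generation: split the files into chunks, pick the chunks most relevant to the question, and send them to the model together with the user's custom instructions. The sketch below uses a simple TF-IDF keyword score as a stand-in for the embedding-based retrieval that production systems often use; the function names and sample notes are hypothetical, and the call to the language model itself is omitted.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def top_chunks(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Rank chunks by the summed TF-IDF weight of the query terms they contain."""
    docs = [Counter(tokenize(c)) for c in chunks]
    n = len(docs)

    def idf(term: str) -> float:
        df = sum(1 for d in docs if term in d)
        return math.log((n + 1) / (df + 1)) + 1.0  # smoothed so it stays positive

    terms = set(tokenize(query))
    scored = [(sum(d[t] * idf(t) for t in terms), c) for c, d in zip(chunks, docs)]
    scored.sort(key=lambda s: s[0], reverse=True)
    return scored[:k]


def build_prompt(instructions: str, query: str, chunks: List[str]) -> str:
    """Combine custom instructions, retrieved context, and the question."""
    context = "\n".join(c for _, c in top_chunks(query, chunks))
    return f"{instructions}\n\nContext:\n{context}\n\nQuestion: {query}"


notes = [
    "The lab notebook records enzyme assay results from March.",
    "The grant deadline is June 30 and the budget cap is 50,000 dollars.",
    "Enzyme activity dropped at high temperature in the assay.",
]
print(build_prompt("Answer using only the provided context.",
                   "enzyme assay temperature", notes))
```

Only the two notes that mention the query terms reach the prompt, which is the point of the retrieval step: the model sees a small, relevant slice of the uploaded material instead of all of it.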

NotebookLM Updates: Customizable AI-Generated Content

Google's NotebookLM, a tool for creating AI-assisted content from various sources, has received an update that allows for greater customization of its outputs. Users can now give the AI specific instructions when generating its podcast-style Audio Overviews, such as focusing on particular chapters of a book or adopting a specific tone or style.

This increased flexibility makes NotebookLM a more powerful tool for content creators, educators, and researchers who want to leverage AI while maintaining control over the final output.

Dropbox Dash: AI-Powered Universal Search

Dropbox has introduced Dash, an AI-powered universal search feature for teams. This tool enhances the search capabilities within Dropbox, allowing users to find relevant documents and information using natural language queries. Dash's ability to understand context and intent could significantly improve productivity for teams that rely heavily on shared document storage.

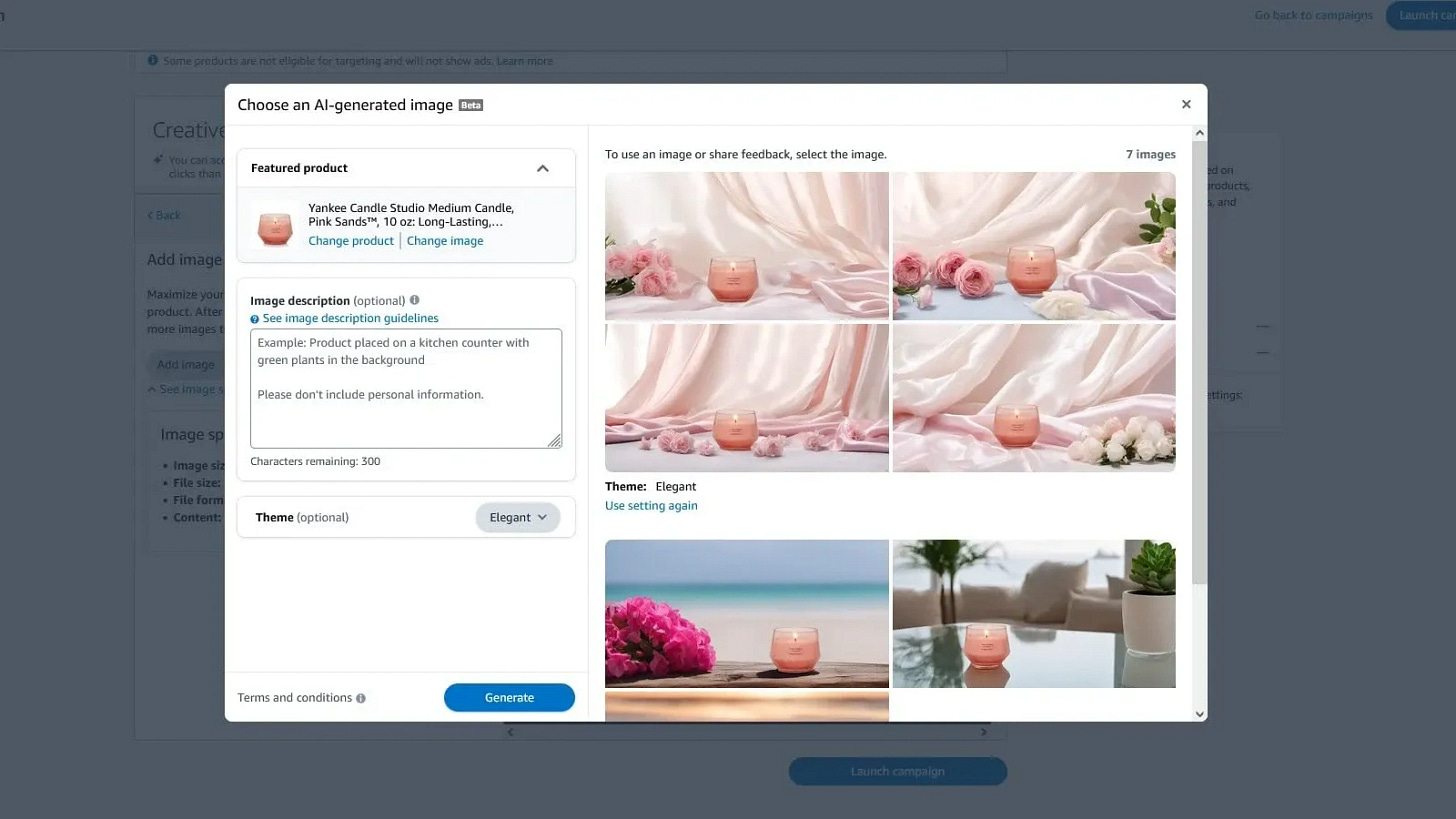

Amazon AI Ads: Enhanced Product Visualization

Amazon has launched a new AI tool for advertisers that can generate enhanced product images and short videos. This technology allows sellers to create more engaging product listings by placing their items in different environments or adding simple animations like steam rising from a coffee mug.

As e-commerce continues to grow, tools like this could help sellers stand out in crowded marketplaces and provide customers with a better sense of products before purchase.

Apple's New iPad: Preparing for Apple Intelligence

Apple has introduced a new iPad mini, powered by the A17 Pro chip, that is designed to run the upcoming "Apple Intelligence" features. While the device itself is similar to previous models, the move to a chip capable of handling these AI tasks suggests that Apple is preparing for a significant push into on-device AI processing.

Although the full capabilities of Apple Intelligence are yet to be revealed, this hardware update indicates that Apple is positioning itself to compete in the AI space, potentially bringing advanced AI features to its mobile devices in the near future.

Emteq Labs' Emotion-Tracking Smart Glasses

In a move that could revolutionize mental health monitoring and dietary tracking, Emteq Labs has unveiled smart glasses embedded with sensors capable of capturing facial expressions in real time. These glasses can detect subtle movements around the eyes, eyebrows, cheeks, and jawline, allowing them to identify expressions of emotion and even track chewing patterns.

The potential applications for this technology include:

1. Mental health monitoring: By tracking facial expressions over time, the glasses could help identify patterns related to mood disorders or stress levels.

2. Dietary management: The ability to detect chewing could assist in preventing overeating and promoting mindful eating habits.

While the consumer version of these glasses is not yet available, Emteq Labs plans to release a development kit by December, paving the way for future applications and research in emotion-aware computing.
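The glasses' chewing detection has not been described in technical detail, and the format of their sensor data is not public. As a purely hypothetical sketch, suppose a sensor near the jaw produces a single motion signal sampled at a fixed rate. Chewing is roughly periodic, so a simple counter can register one chew each time the signal rises above a high threshold and re-arm only after it falls below a lower one. The thresholds, sample rate, and synthetic signal below are all assumptions.

```python
import math
from typing import List


def count_chews(signal: List[float], high: float, low: float) -> int:
    """Count chewing cycles as upward crossings of `high`. A new cycle can only be
    counted after the signal drops below `low` (hysteresis), so small ripples
    near the threshold are not double-counted."""
    count = 0
    armed = True
    for v in signal:
        if armed and v > high:
            count += 1
            armed = False
        elif not armed and v < low:
            armed = True
    return count


# Hypothetical jaw-motion signal: 1.5 chews per second for 10 s, sampled at 50 Hz,
# with a small 12 Hz ripple standing in for sensor noise.
fs, seconds = 50, 10
signal = [math.sin(2 * math.pi * 1.5 * i / fs) + 0.1 * math.sin(2 * math.pi * 12 * i / fs)
          for i in range(fs * seconds)]
chews = count_chews(signal, high=0.5, low=-0.5)
print(chews, "chews,", chews / seconds * 60, "per minute")  # 15 chews, 90.0 per minute
```

The two-threshold design matters because a single threshold would count every small ripple that crosses it as a separate chew.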

Conclusion: The Accelerating Pace of AI Innovation

The developments highlighted in this newsletter demonstrate the rapidly accelerating pace of AI innovation across multiple sectors. From creative tools that push the boundaries of what's possible in digital art and video production to AI models that can run on personal devices, we're seeing a shift towards more accessible and powerful AI technologies.

The integration of AI into filmmaking, advertising, and personal computing suggests that we're moving towards a future where AI assistants are commonplace in both professional and personal contexts. However, as these technologies advance, it's crucial to consider the ethical implications, particularly in areas like data privacy and the potential for AI to influence human behavior.

As we continue to witness breakthroughs in AI, it's clear that the technology will play an increasingly significant role in shaping our digital experiences, creative processes, and even our understanding of human emotions. Staying informed about these developments will be essential for professionals across all industries as they navigate the AI-driven future.