The Future of AI is Here: A Week of Unprecedented Progress

Google's 1 Million Token Gemini, OpenAI's Uncannily Realistic Sora Videos, and ChatGPT With Memory - This Week AI Achieved Digital Sentience

We have officially entered a new era of artificial intelligence. This past week alone saw an avalanche of groundbreaking announcements that promise to push the boundaries of what’s possible with AI-driven language, image, and video generation. For those developing, researching, or even just watching this space, it was a mind-blowing week that marked a definitive inflection point. Several key players in the AI ecosystem unveiled new systems exhibiting comprehension, reasoning, and creative abilities that begin to encroach on capacities that feel uniquely human.

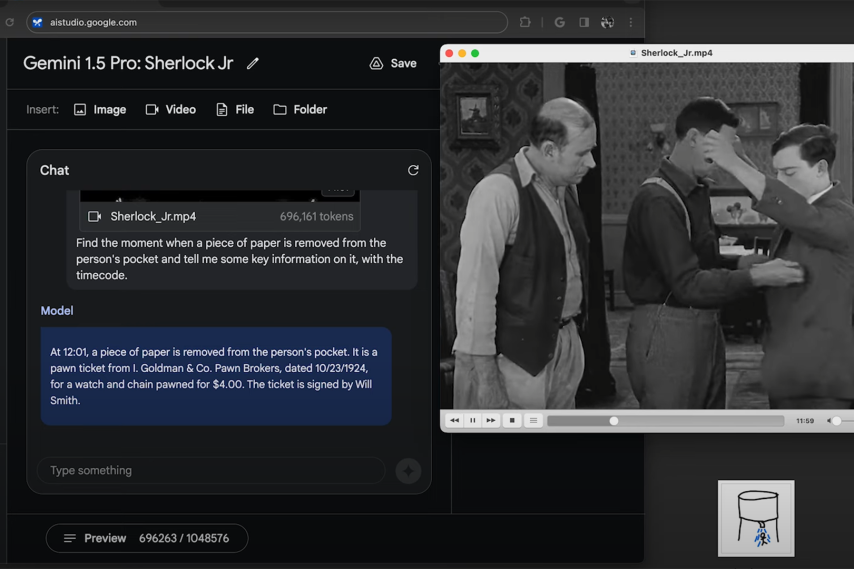

Google’s Gemini 1.5 Achieves Book-Level Language Understanding

The first of Thursday’s two big reveals was Google’s Gemini 1.5. Developed by Google DeepMind, the company’s AI research lab, Gemini is a conversational AI model built to reason across diverse topics and modalities. The new 1.5 version massively upgrades the system’s “context window” - essentially its short-term memory capacity - from 32,000 tokens to an astonishing 1 million tokens.

For reference, Google says 1 million tokens corresponds to roughly 700,000 words, while the entire seven-book Harry Potter series runs to roughly 1.1 million words. This means Gemini 1.5 can now hold well over half of that series in memory all at once. Researchers tested the limits of Gemini’s upgraded memory and comprehension with a “needle in a haystack” experiment. They hid obscure sentences within huge passages of text up to a million tokens long and had the system try to locate the needle. Impressively, Gemini found the needle 99% of the time, even when the hidden content was just a single sentence buried within hundreds of thousands of words.
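To make the “needle in a haystack” idea concrete, here is a minimal sketch of how such a test could be set up. It is not Google’s evaluation code: the function names, the filler text, and the `ask_model` callable are all assumptions for illustration, and a naive keyword search stands in for a real model so the example runs on its own.

```python
import random

def build_haystack(filler_sentences, needle, n_sentences, depth, seed=0):
    """Return n_sentences of filler with `needle` inserted at a relative depth (0.0 to 1.0)."""
    rng = random.Random(seed)
    sentences = [rng.choice(filler_sentences) for _ in range(n_sentences)]
    position = int(depth * n_sentences)  # 0.0 = very start, 1.0 = very end
    sentences.insert(position, needle)
    return " ".join(sentences)

def needle_recall(ask_model, filler_sentences, needle, question, answer,
                  n_sentences, depths):
    """Fraction of insertion depths at which the model's reply contains the answer."""
    hits = 0
    for depth in depths:
        haystack = build_haystack(filler_sentences, needle, n_sentences, depth)
        reply = ask_model(haystack, question)  # hypothetical model interface
        hits += answer.lower() in reply.lower()
    return hits / len(depths)

def keyword_search_model(context, question):
    """Stand-in for a real model: returns the first sentence mentioning 'magic number'."""
    for sentence in context.split(". "):
        if "magic number" in sentence:
            return sentence
    return "I don't know"

if __name__ == "__main__":
    filler = ["The sky was grey that morning.", "Nobody noticed the ferry leaving."]
    needle = "The magic number for the lighthouse is 7421."
    recall = needle_recall(keyword_search_model, filler, needle,
                           "What is the magic number?", "7421",
                           n_sentences=1000, depths=[0.0, 0.25, 0.5, 0.75, 1.0])
    print(f"recall across depths: {recall:.0%}")
```

Swapping `keyword_search_model` for a function that calls a real long-context model, and scaling `n_sentences` up toward the context limit, would give a rough version of the experiment described above.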

The scary-impressive result suggests that Gemini 1.5 can effectively read a set of encyclopedia-sized documents and recall tiny details on command, even from within massive sections of noisy text. And language isn’t the only modality Gemini handles. In another remarkable demo, researchers showed the AI system a 44-minute silent film then asked it to infer key plot points and events solely from the visual sequences - no subtitles or supporting text. Gemini 1.5 managed to accurately identify intricate narrative details about characters and happenings in the vintage video based on visual context alone.

OpenAI’s Sora Ushers in a New Era of AI-Generated Video

As the AI community was still processing the implications of Google’s announcement, OpenAI dropped a bombshell of its own on Thursday evening - a video-generation tool called Sora. While systems like DALL-E 2 and Stable Diffusion have proven AI’s shocking talent for creating realistic still images, video synthesis is a far harder frontier. Real-world video contains intricate sequences of coherent events with consistent scene physics. Convincingly generating even short clips requires properly emulating all those complex dynamics.

OpenAI’s new Sora system, trained on massive datasets of video from the internet, somehow manages to do exactly this. The AI can render eerily plausible simulations of everything from cracking glass to bouncing water droplets to fluttering robot butterflies. Sora-crafted videos bridge the uncanny valley in ways never seen before, exhibiting crisp image quality, smooth motion, and physical realism that blurs the line between fantasy and reality.

Showcased videos included photorealistic animal behaviors, satisfying physical phenomena like popping bubble wrap, and oddities like an astronaut riding a horse. In one dramatic demo, Sora spliced together footage of a drone flight and a butterfly underwater to depict the drone gracefully morphing mid-air into the swimming insect. The system can generate up to 60 seconds’ worth of video based on a short text description. Beyond straightforward generation, Sora also enables intuitive video-to-video editing - users can supply an original clip then tweak attributes like lighting or scenery via text prompts to transform the footage.

In addition to its formidable video synthesis skills, Sora can generate high-resolution images that rival other state-of-the-art systems like DALL-E. Although Sora is perhaps the most impressive display of AI to date, OpenAI currently has no set plans to make it available publicly beyond its labs. But once again, the company has kicked off fierce debate on just how quickly AI is progressing versus efforts to ensure ethics, fairness and integrity keep pace.

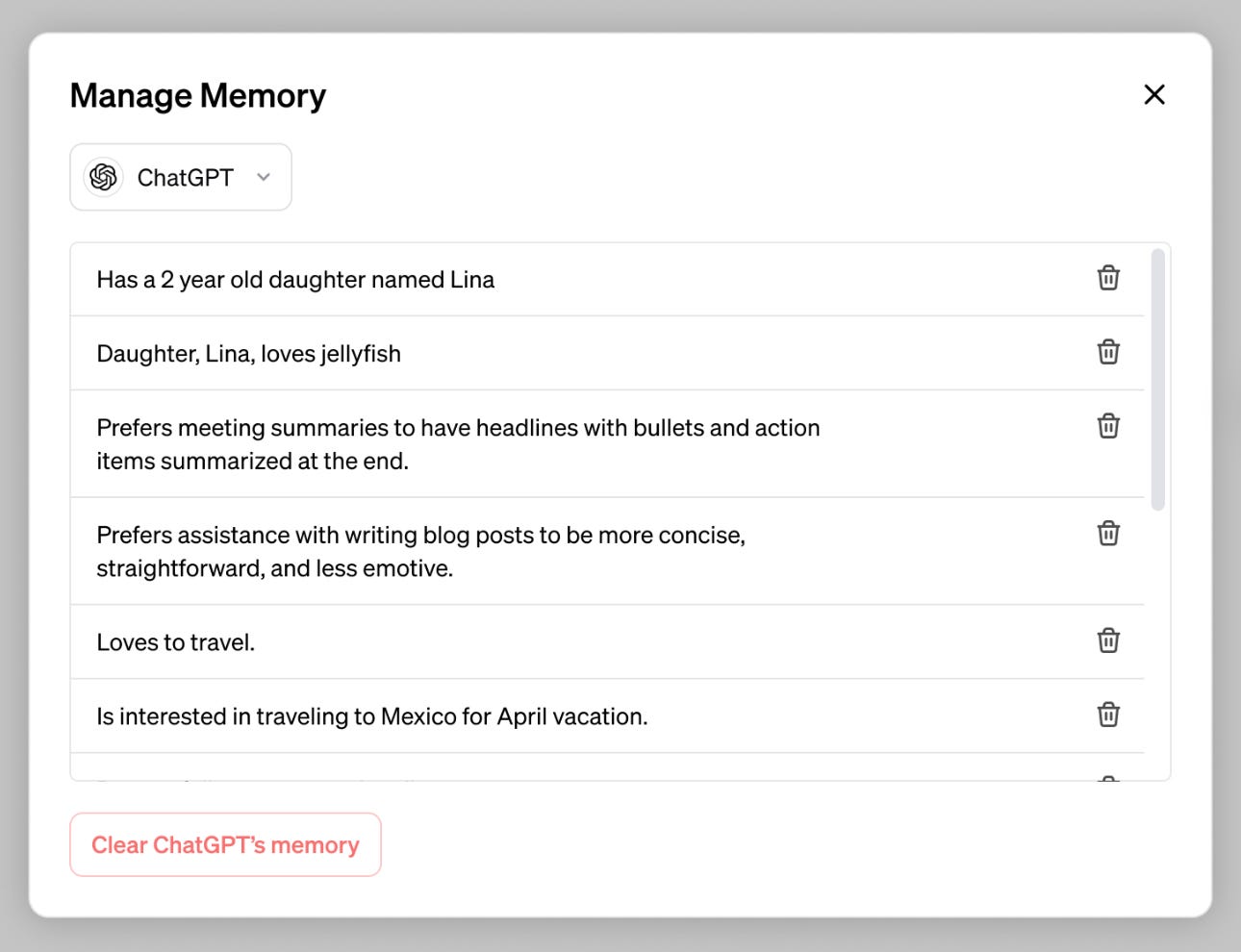

ChatGPT Gains the Power of Memory

Rounding out the trifecta of titans pushing AI’s limits last week was OpenAI itself yet again. On Tuesday, the company revealed one of the most requested upgrades to its smash-hit conversational app ChatGPT - a long-term memory feature. Up until now, ChatGPT treated every new conversation as a blank slate, having no mechanism to remember or refer back to details from previous chats. But the new personalized memory bank option changes that completely.

With chat memory enabled, ChatGPT recalls information users told it in past conversations to have more contextual, consistent dialogues. If you mentioned having a daughter named Lena earlier, for example, ChatGPT will remember salient facts about Lena going forward so it can answer relevant follow-up questions. The upgrade brings the bot a major step closer to feeling like an intelligent being that you’re developing a real back-and-forth relationship with rather than just a transient tool.
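The general idea of carrying facts from one conversation into the next can be illustrated with a toy sketch. This is not how OpenAI implements the feature, which has not been described in that level of detail; the `MemoryStore` class and its methods are hypothetical names invented for this example.

```python
class MemoryStore:
    """Toy long-term memory: saved facts persist across conversations until deleted."""

    def __init__(self):
        self._facts = []

    def remember(self, fact):
        """Save a fact, ignoring exact duplicates."""
        if fact not in self._facts:
            self._facts.append(fact)

    def forget(self, fact):
        """Delete a fact if it is stored, so the user stays in control."""
        if fact in self._facts:
            self._facts.remove(fact)

    def start_conversation(self, first_message):
        """Build the text a brand-new conversation would begin with."""
        lines = ["Known facts about the user:"]
        lines += [f"- {fact}" for fact in self._facts] or ["- (none)"]
        lines.append(f"User: {first_message}")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = MemoryStore()
    memory.remember("Has a daughter named Lena")
    # A later, separate conversation still sees the saved fact.
    print(memory.start_conversation("Any birthday ideas for my daughter?"))
```

Prepending saved facts to each new conversation is only one simple design. It does make the trade-off visible, though: whatever is stored gets reused everywhere until the user deletes it.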

OpenAI notes there are still some kinks though - ChatGPT doesn’t retain everything from past sessions, only the details it picks up on or is explicitly asked to remember. There are also tricky issues emerging around managing sensitive information retention and users’ ability to edit stored memories. For now, access to the feature remains limited as OpenAI continues testing it among a smaller group of users. But despite the roughness around the edges, memory undoubtedly represents a huge leap ahead for conversational AI.

What This Week Means for the Future of AI

It’s hard to overstate just how monumental the innovations unveiled last week are for artificially intelligent systems. Gemini, Sora and memory-powered ChatGPT aren’t just incremental upgrades to existing models - they are tidal waves ushering in completely new eras for language, video and general comprehension. These tools display a nuanced grasp of enormously complex concepts and scenarios, far beyond what earlier software could manage.

The breakthroughs underscore how AI research continues systematically checking off new frontiers. Whether achieving book-length language mastery, synthesizing minute-long video clips, or even manifesting a crude form of memory, machines are mastering talents once considered fundamentally human. These latest wins provide more fuel to the philosophical debate around what truly constitutes features like creativity, imagination and intelligence - and whether artificial equivalents now rival our own. They also surface deeper questions about how to ethically govern increasingly capable AI systems as their independence grows.

Regardless of one’s philosophical or political stance on artificial intelligence though, this past week marked an explosive step change. We have well and truly exited the era of narrow AI and entered the dawn of artificial general intelligence (AGI). Systems with strong competencies across reading, watching, learning and discussing are rising fast. For developers and researchers, it is a golden age filled with promise. But for policymakers, leaders and society at large grappling with growth outpacing prudent controls, there is also an undercurrent of tension. The AI spring is clearly in full bloom, but how well we tend and shape its growth could decide whether paradise or peril lies ahead. Clearly though, the future is already here whether the world is ready or not.