The Rise of Deceptive Deepfakes: How an AI-Generated MrBeast Ad Infiltrated TikTok

Deceptive Deepfakes Infiltrate Social Media: How An AI-Generated MrBeast Ad Highlights The Growing Threat Of Manipulated Media

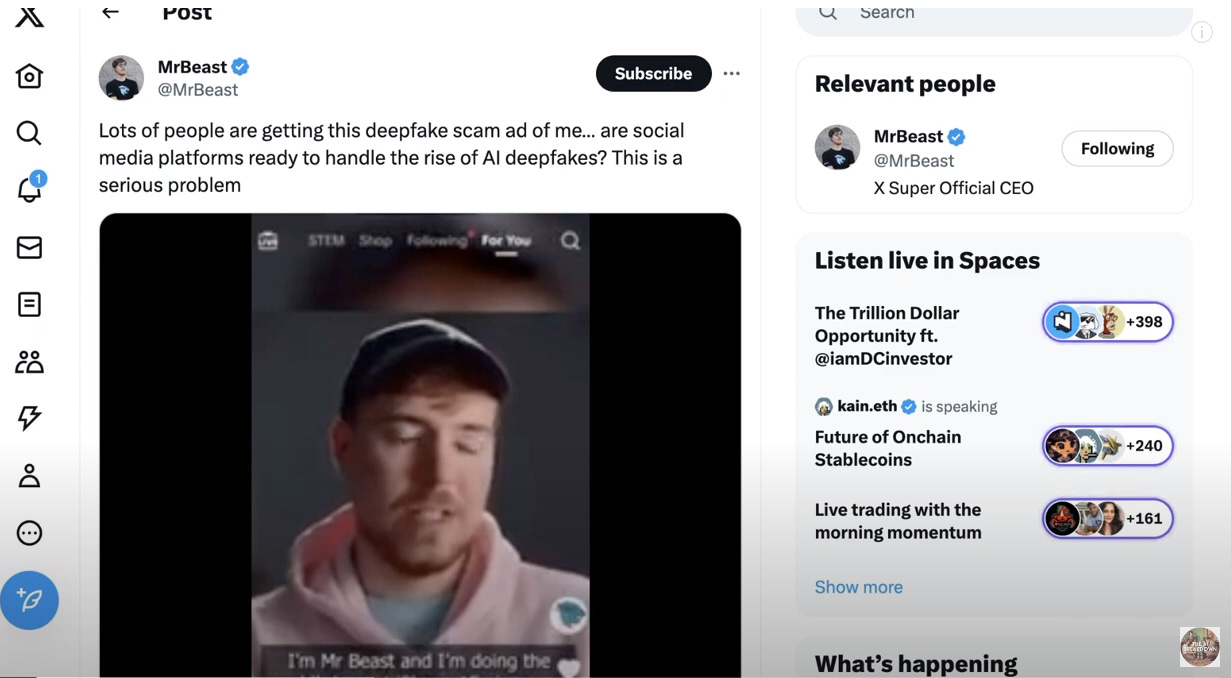

Influencer marketing has always walked a fine line between promotion and deception. But now, with the rise of deepfake technology, a new level of potential fraud has emerged online. This was exemplified by a recent viral TikTok ad falsely depicting YouTube mega-star MrBeast offering viewers $2 iPhones. While TikTok relies on both human moderators and AI to screen content, this adversarial AI-generated deepfake evaded detection, illustrating the growing threat of manipulative synthetic media.

Jimmy “MrBeast” Donaldson has amassed over 111 million YouTube subscribers and billions of views through attention-grabbing stunts and giveaways. In his most popular videos, MrBeast does things like challenge his friends to spend 24 hours trapped on a deserted island or compete for hundreds of thousands of dollars in over-the-top competitions.

Given his reputation for handing out free gaming consoles, cars, and even houses with no strings attached, an ad offering $2 iPhones from MrBeast seems plausible enough to trust. And that’s exactly why deepfake scammers targeted him. They knew his brand and popularity could help convince unsuspecting TikTok users to hand over money or personal data.

The ad features an AI-generated deepfake of MrBeast telling viewers “If you’re seeing this video, you’re one of the 10,000 lucky people who will get an iPhone 15 Pro for just $2.” The deceptive promo matches his typical upbeat speaking style and even includes subtle background music to sell the plausibility.

While TikTok has rules requiring disclosure when ads use manipulated or synthetic media, the scammers simply ignored them. TikTok relies on a combination of human content moderators and AI filtering to catch policy violations before ads go live. But this deepfake's technical sophistication and deceptive realism evaded both human and algorithmic detection.

Within hours of being posted, the fraudulent ad was removed. But its brief presence still raises concerns about the platform’s ability to combat AI disinformation as deepfake generation becomes increasingly accessible. Corporate policy alone cannot offer a solution if the detection AI itself is outsmarted.

And MrBeast is far from the only celebrity being exploited in deepfake scams. Over the same week, actor Tom Hanks and news anchor Gayle King also issued warnings about AI-generated ads illegally using their likenesses. Victims of synthetic identity theft now stretch across ages and demographics.

As deepfakes infiltrate social media feeds, even younger generations of internet-savvy users face risks of manipulation. And major platforms like TikTok now face pressure to find technical solutions before deepfakes erode consumer trust and enable large-scale fraud.

The Evolution of Digital Deception

To understand the emerging threat of AI-generated deepfakes, it helps to contextualize how digital deception has evolved over time. Online scammers have long used impersonation and misrepresentation to exploit consumer trust.

In the earliest days of the internet, simple text-based deception dominated. Scammers posed as strangers online to bait victims into disclosing personal information and bank account access. As email grew prominent, phishing scams emerged using spoofed corporate addresses and logos.

The rise of ecommerce then brought a flood of fake retail sites luring shoppers to purchase hot products at amazing discounts. Links spread across forums and social media to landing pages with the look and feel of legitimate brands. But after taking payment, the fraudulent sellers simply disappeared.

Next came more advanced techniques like simulating reality through fake reviews. Brand new accounts with AI-generated profile photos would post 5-star raves on Amazon to boost products. Or competitors would flood rivals with 1-star ratings. With no reliable way to tell genuine reviews from manipulated ones, it became nearly impossible for shoppers to make informed decisions.

Deepfakes represent the latest evolution of hyper-realistic deception. Powered by AI, forged identities can now be brought to life. Realistic depictions of celebrities and influencers like MrBeast enable scammers to exploit their fame and credibility. Even seeing is no longer believing.

How Deep Learning Enables Deception

Much of the AI behind modern deepfake technology builds on generative adversarial networks, or GANs. A GAN uses two competing neural networks: one generates content and the other evaluates how realistic it looks.

The generator tries to create images, audio, and video that mimic the source data as closely as possible. The discriminator then analyzes that content for flaws revealing it is fake. This constantly escalating battle drives the generator to improve until it can reliably fool the discriminator.
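The competition described above can be made concrete with a deliberately tiny sketch. It assumes a one-parameter "generator" that emits a single number `b` and a logistic "discriminator" with hypothetical weights `w` and `c`; real deepfake models are deep networks trained on images or audio, so this only illustrates the shape of the two objectives, not an actual system.

```python
import math


def sigmoid(t: float) -> float:
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-t))


def discriminator(x: float, w: float, c: float) -> float:
    """Toy discriminator: probability that sample x is real."""
    return sigmoid(w * x + c)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when real samples score near 1 and fake samples score near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Low when the discriminator scores the fake sample as real."""
    return -math.log(d_fake)


def generator_step(b: float, w: float, c: float, lr: float = 0.5) -> float:
    """One gradient-descent step on the generator's only parameter b.

    The fake sample is x = b, and d/db of -log D(b) is -(1 - D(b)) * w.
    """
    grad = -(1.0 - discriminator(b, w, c)) * w
    return b - lr * grad


if __name__ == "__main__":
    w, c = 1.0, -2.0  # hypothetical, fixed discriminator weights
    b = 0.0           # the generator's starting output
    for step in range(5):
        score = discriminator(b, w, c)
        print(f"step {step}: sample={b:.3f} fooled-probability={score:.3f}")
        b = generator_step(b, w, c)
```

In a full GAN the discriminator is updated as well, using `discriminator_loss`, so the target the generator is chasing keeps moving. Holding it fixed here just makes it easy to see the generator's output drift toward whatever the discriminator currently accepts as real.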

When deployed online, GANs enable the creation of spoofed media that goes beyond static images. Sophisticated deepfakes can now depict influential figures delivering custom scripts on video. The AI composes fresh frames, stitches footage together, and even synthesizes audio that mimics a targeted individual’s voice and speech patterns.

While deep learning can be used for creative pursuits like generating new art, music, and entertainment, it also offers criminals and scammers ways to create highly deceptive content. Even tech experts struggle to distinguish real videos from AI fabrications. So for average social media users, deepfakes are an extremely difficult deception to spot.

Deepfakes Go Mainstream

Once dismissed as too complex for real-world use, deepfake technology is now fully mainstream. Tools like Photoshop enabled previous generations of digital deception; deep learning has pushed the state of the art to an unprecedented level of realism.

Rapid improvements in algorithms, training techniques, and computing power have lowered the barriers tremendously. Generating convincing deepfakes no longer requires elite AI expertise and research budgets. High-quality results are achievable on consumer hardware like desktop GPUs.

Inevitably, these exponential gains have moved deepfakes out of academic computer labs into the wild. Online communities formed to collect datasets and share methods for forging pornographic content and celebrity videos. But tech giants initially struggled with how to moderate this content given potential limits on free speech.

Of course, it didn’t take long for scammers to recognize the criminal potential. VentureBeat wrote about the rise of GAN crime as deepfakes were used for fraud, phishing, impersonation, and extortion. But ad policies remained reactive and unable to curb new forms of AI-powered deception.

Until recently, deepfake ads were mainly limited to sketchy forums and illegal Dark Web marketplaces. But the MrBeast TikTok scam illustrates how the technology has now graduated to mainstream platforms. Despite bans, deepfakes are proliferating faster than they can be identified and removed.

Is It Too Late to Stop Deepfakes?

The question now is whether the battle against manipulated media is already lost. Have deepfakes grown too ubiquitous to ever contain? Some experts argue limits must be placed on AI research and access to protect society. But similar calls to control the early internet went largely unheeded.

David Carroll, an associate professor of media design at Parsons School of Design, told Wired that “the cat’s already out of the bag” when it comes to limiting deepfake technology. At this point, any individual or group motivated to create deepfakes will find community resources to achieve their goals.

Until recently, Big Tech could at least place limits around how artificial media could spread through content moderation and platform bans. But now generative AI models like Stable Diffusion enable users to create deepfakes directly on consumer devices. There is no upload filter if you can simply generate it locally.

With affordable innovation outpacing regulation, deepfakes seamlessly blend truth and lies. UCLA law professor Eugene Volokh suggests accepting our inability to entirely eliminate them, but focusing protection on identifiable individuals victimized through forged media.

Other experts suggest stronger requirements around disclosure and watermarking. But as seen with the TikTok ad, policies mean little if the content itself appears authentic. MrBeast claims even he cannot discern his real videos from the deepfakes. When AI can fool AI, trust becomes subjective.

Building Better Detection

While the arms race between deepfake creation and detection may seem hopeless, improved solutions are emerging. It just requires moving beyond expectations that static analysis of finished works can reveal absolute truths.

Instead, DARPA is researching dynamic "media forensics" that examine the actual generation process for signs of manipulation. By analyzing the DNA of media as it's created rather than just the finished product, deception can be revealed.

There are also creative calls to add "noise" to media: intentional distortions that do not affect human perception but leave a signature that software can detect. If generation methods leave detectable fingerprints, media authenticity becomes statistically measurable.

Analysis of social patterns around media can provide further clues regarding manipulated content. Carnegie Mellon researchers found AI-generated deepfakes lack the unique metadata of genuine user uploads. Analyzing associated metadata helps separate the real from the fake.

No single solution will quantify truth in media with complete certainty. But combining probabilistic forensic analysis, audio-visual artifacts, metadata patterns, and threat-modeling of bad actors can yield high-confidence assessments.
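As a rough illustration of how several weak signals might be combined into one assessment, the sketch below fuses per-signal probabilities in log-odds space. It assumes the signals are independent given the truth (a naive Bayes assumption that rarely holds exactly), and the signal names and scores are hypothetical placeholders rather than outputs of any real detector.

```python
import math


def logit(p: float) -> float:
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


def fuse_signals(signal_probs: dict[str, float], prior: float = 0.5) -> float:
    """Combine per-signal 'probability of fake' scores into one estimate.

    Each score is treated as a posterior computed from the same prior, and
    the signals are assumed to be independent (an assumption of this sketch).
    """
    prior_logit = logit(prior)
    total = prior_logit
    for p in signal_probs.values():
        # Clamp so a single overconfident signal cannot produce infinite odds.
        p = min(max(p, 1e-6), 1.0 - 1e-6)
        total += logit(p) - prior_logit
    return 1.0 / (1.0 + math.exp(-total))


if __name__ == "__main__":
    # Hypothetical scores from three separate checks on one video ad.
    scores = {
        "forensic_artifacts": 0.70,
        "audio_visual_sync": 0.65,
        "metadata_anomalies": 0.80,
    }
    print(f"combined probability of manipulation: {fuse_signals(scores):.3f}")
```

Several signals that are each only mildly suspicious can add up to a confident verdict, which is the point of combining them. In practice the independence assumption would overstate confidence when signals are correlated, so a real system would need calibration against labeled examples.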

Until deepfake detection is on par with state-of-the-art generation, the public will need to view content with an increasingly skeptical eye. The MrBeast scam may seem obvious in retrospect, but hindsight is 20/20. With sufficient context and desire for something to be true, even savvy social media users can be temporarily fooled.

Platforms face growing public pressure to take responsibility given their role in amplifying manipulated content. But both policies and technology will need ongoing refinement to keep pace with subversive AI systems purpose-built to fool human reviewers. Without fundamental improvements in media analysis and authentication, today's state-of-the-art deepfakes may someday seem like child's play compared to what comes next.