The World of AI Continues to Rapidly Evolve

Major Advances in Generative Models, Multimodal AI, Open Source Projects, Responsible AI Efforts, and More

The field of artificial intelligence continues to advance quickly, with major players like Google, Microsoft, Amazon, and Apple making big moves. Here's a look at some of the latest updates in the world of AI.

Amazon and Google Focus on AI for Advertising

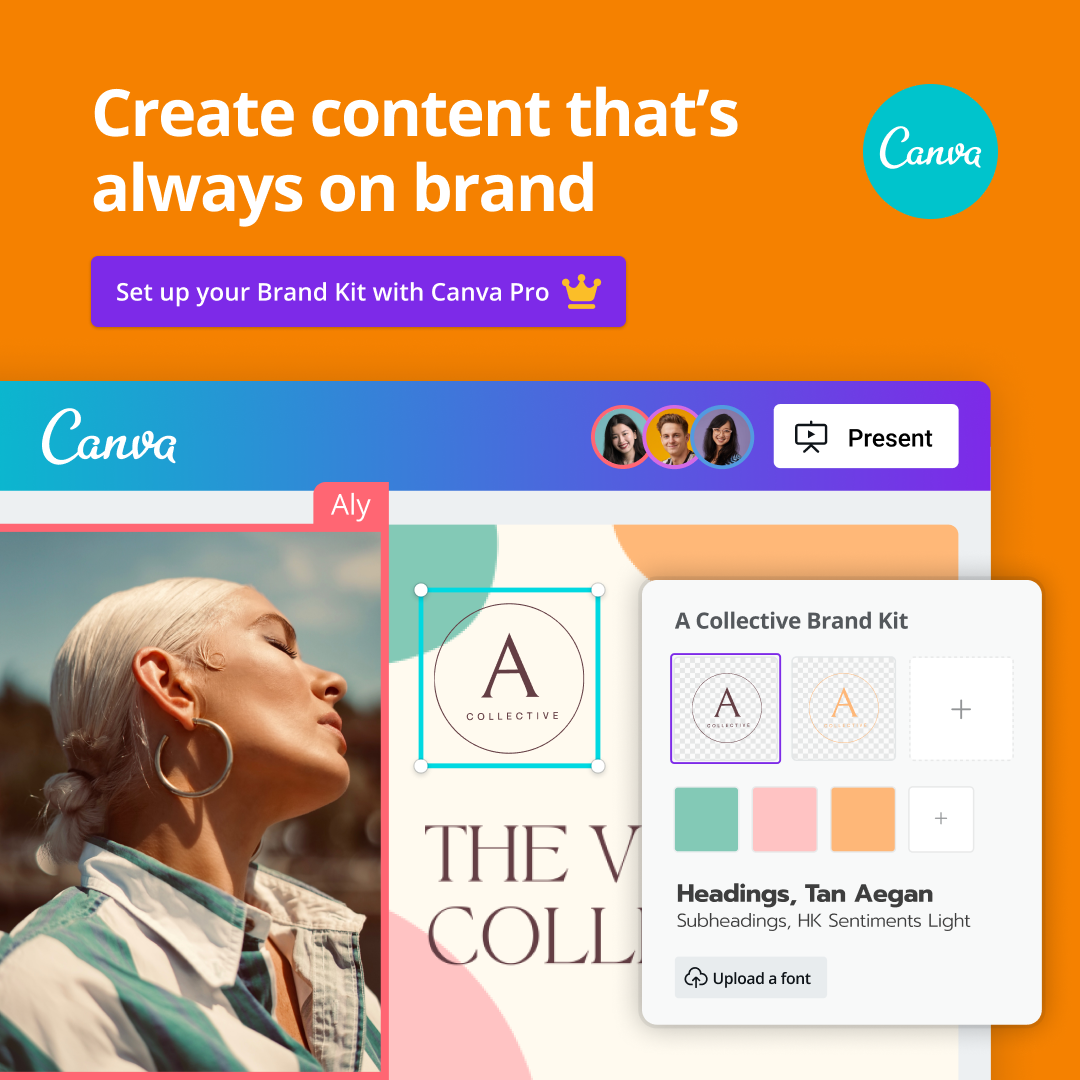

Both Amazon and Google are leveraging AI to improve advertisements. Amazon rolled out an AI-powered image generation tool to help sellers create better visual ads. The tool can take a generic product image and apply a specific background to make it stand out. This allows Amazon advertisers to quickly generate high-quality, eye-catching images.

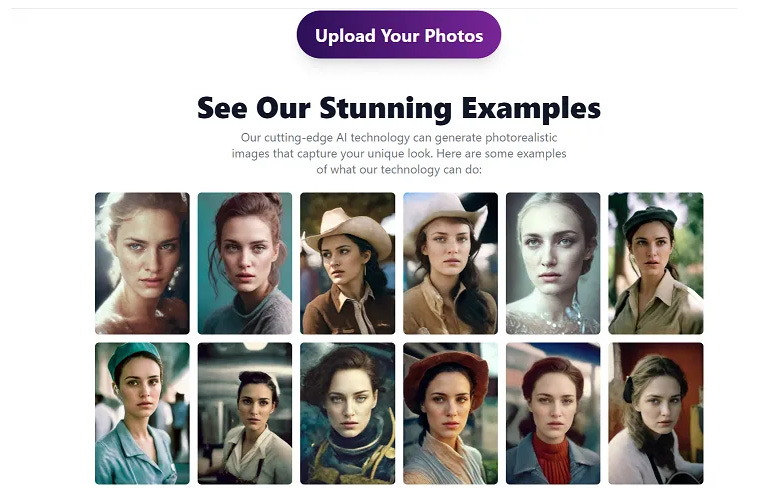

Meanwhile, Google announced a new "About This Image" feature to help users fact-check images. It shows an image's origins, its metadata, and how it has been described on other sites. This context can help debunk false claims and identify AI-generated images. Images created by Google's own AI tools will also be labeled.

These developments indicate that generative AI will become increasingly common in advertising. However, challenges remain around responsible use and transparency about where images come from.

Advances in Open Source AI Art

The open source AI art space also saw notable developments. Researchers at the University of Chicago released Nightshade, a tool that lets artists subtly alter their images so that they "poison" AI models trained on them without permission, causing unpredictable glitches in those models' outputs. While clever, this cat-and-mouse game may fade in importance as AI developers rely more on ethically sourced training data.

In more constructive news, Common Canvas launched: an open source diffusion model trained on Creative Commons images. While it is not as advanced as leading models, it shows a path to training capable AI on openly licensed content.

Stable Diffusion also gained a new variant. Segmind's SSD-1B, a distilled version of Stable Diffusion XL, aims to retain much of the original's quality in a smaller, faster package. And with Latent Consistency Models, which sharply reduce the number of sampling steps needed, Stable Diffusion can generate images on an M1/M2 Mac in under a second, rivaling proprietary services.

These developments highlight the rapid pace of open source AI. Small teams are producing models that compete with those from well-funded startups, often at no cost to users.

Multimodal AI Models Gain New Abilities

Multimodal AI systems, which combine vision, language, and other modalities, also made progress. The research project Woodpecker reportedly reduced hallucinations in these models by 80%. It automatically checks the claims in a model's captions against the image and corrects errors, showing how existing models can be retrofitted to improve reliability without retraining.
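To make the "check captions against images" idea concrete, here is a toy sketch of post-hoc caption verification. It is not Woodpecker's actual pipeline. It assumes a hypothetical, hard-coded set of detector labels (`DETECTIONS`) standing in for a real vision model, and it assumes each caption sentence has already been paired with the object it claims is present. Sentences whose object was not detected are removed.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical detector output, standing in for a real object-detection model.
# In a real system these labels would come from running a vision model on the image.
DETECTIONS: Dict[str, Set[str]] = {
    "street.jpg": {"car", "person", "traffic light"},
}


def verify_caption(image_id: str, claims: List[Tuple[str, str]]) -> Tuple[str, List[str]]:
    """Keep caption sentences whose object was detected; flag the rest.

    Each claim pairs one caption sentence with the object it asserts is present.
    Returns the corrected caption and the list of removed (unsupported) sentences.
    """
    seen = DETECTIONS.get(image_id, set())
    kept = [sentence for sentence, obj in claims if obj in seen]
    removed = [sentence for sentence, obj in claims if obj not in seen]
    return " ".join(kept), removed


# Usage: the "dog" sentence is not supported by the detections, so it is dropped.
claims = [
    ("A red car is parked by the curb.", "car"),
    ("A dog sleeps on the sidewalk.", "dog"),
    ("A person waits at the crossing.", "person"),
]
caption, removed = verify_caption("street.jpg", claims)
print(caption)
print(removed)
```

Real systems replace the lookup table with learned models and also verify attributes and counts, not just object presence. The core pattern is the same, though: generate first, then verify each claim against the image.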

In entertainment, a robot tour guide combined computer vision with ChatGPT-like conversational capabilities, an impressive showcase of embodied AI's potential.

However, Microsoft co-founder Bill Gates believes the technology behind today's large language models may have plateaued, and he has set low expectations for how much models like GPT-5 will improve on their predecessors. Many experts disagree, given the field's pace of change.

Large Language Models Become More Capable

In the large language model space, an open source 7-billion-parameter model called Zephyr 7B outperformed leading open source alternatives. Despite being roughly 10x smaller, it edged out some 70B-parameter models on benchmarks.

OpenAI also formed an internal team to manage risks of language models. The "preparedness team" will study dangers of misuse and how to safeguard models. Combined with their external AI safety forum and research funding, OpenAI is ramping up efforts to ensure responsible AI development.

These events show open source's ability to create highly efficient AI with limited resources. They also show that while advanced models like GPT-4 have raised concerns, developers such as OpenAI are taking active steps to address AI safety and ethics.

Big Tech Targets AI Chip Improvements

On the hardware front, leaks suggest Qualcomm's upcoming Snapdragon chip focuses on AI and generative models. Apple is also reportedly developing new silicon optimized for AI. Some observers expect these Qualcomm and Apple chips to outpace competitors on AI workloads.

Additionally, Nvidia announced improvements to its AI supercomputer. The updated DGX SuperPOD architecture leverages new networking tech to enable training models with over 20 billion parameters. Capabilities like this will further the reach of massive AI models.

Advances in specialized AI hardware will expand what's possible with neural networks, but they also raise concerns about a computational arms race and higher barriers to entry for smaller players.

Tools Emerge to Run Cutting-Edge AI Locally

On a more democratic note, new techniques are allowing advanced AI to run efficiently on personal devices.

Distilled Stable Diffusion models can generate images close in quality to the full version using as little as 3GB of VRAM. This could bring state-of-the-art AI art to widely available consumer GPUs.
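Why do smaller or distilled models fit on consumer GPUs? A back-of-the-envelope estimate multiplies the parameter count by the bytes each parameter occupies (4 bytes in fp32, 2 in fp16). The sketch below assumes weights dominate memory use and ignores activations, caches, and framework overhead, so real VRAM needs will be higher. The model sizes are illustrative round numbers, not exact figures for any specific release.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; activations, caches and framework overhead are ignored.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative model sizes: a ~1B image model, a 7B LLM and a 70B LLM.
for name, params in [("1B image model", 1e9), ("7B LLM", 7e9), ("70B LLM", 70e9)]:
    fp16 = weight_memory_gb(params)
    int4 = weight_memory_gb(params, "int4")
    print(f"{name}: ~{fp16:.0f} GB in fp16, ~{int4:.1f} GB in int4")
```

The same arithmetic shows why a 7B model is far easier to run locally than a 70B one: its fp16 weights need roughly 14 GB rather than 140 GB, and lower-precision formats shrink both further.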

Similarly, a Mac-optimized version of Stable Diffusion harnesses Apple silicon to generate images in under one second, faster than many online services.

These developments lower barriers to state-of-the-art AI, allowing everyday users to access models once limited to big tech players.

Ethical Considerations Around AI Art Persist

Discussion continues around the implications of AI art. While some view it as a harmless novelty, others see legitimate concerns about copyright, attribution, and the role of human creatives.

A new study found that AI-generated art can perpetuate stereotypes, including gender and racial bias, underscoring the need for careful curation of training data.

Some musicians also worry about voice replication. Reports suggest YouTube is seeking deals to license artists' voices for a rumored AI music tool, but agreements remain elusive amid unresolved questions about likeness rights.

These tensions will likely persist. But initiatives like Creative Commons datasets demonstrate paths to train AI more ethically.

Steady Improvements in AI Video Generation

AI video generation also saw incremental advances. Google Brain research produced high-quality videos at adjustable resolutions without the need for initial VAE training. While not yet cutting-edge, it is a notable improvement in efficiency.

Genmo upgraded its Replay model with smoother image-to-video capabilities. Independent project Pabs shared a demo of its beta video model rendering detailed water simulations.

Startups continue racing to reach new milestones in resolution, length and photorealism. But major challenges remain before AI video becomes truly versatile.

Progress Continues in Conversational AI

ChatGPT alternatives also evolved, among them Anthropic's Claude, a more constrained conversational agent designed to reduce harmful misinformation.

On the open source front, Zephyr 7B posted impressive benchmark results. Despite being small by industry standards, it approached state-of-the-art proprietary models in some evaluations.

These developments keep expectations high that near-human-level conversational AI is on the horizon. However, challenges remain in correcting model mistakes and preventing toxic output.

Initiatives Seek to Make AI Development More Inclusive

On the social impact side, organizations launched programs to democratize access to AI. India's government opened a platform offering students free natural language processing resources. Google also announced a digital skills initiative that aims to reach 10 million people and expand access to AI education.

These signal positive trends in spreading AI literacy more broadly. If done responsibly, enabling more people to develop AI could lead to more diverse perspectives.

Overall, progress across the AI landscape continues at a torrid pace. As models become more powerful and accessible, managing risks remains crucial. But with prudent governance, AI breakthroughs could also unlock solutions to many global problems. The path forward requires nurturing innovation while centering ethics, transparency and accessibility.